AI in Software Development 2025: From Exploration to Accountability – Survey-Based Analysis

For the second year in a row, Techreviewer has conducted an online survey on the role of AI in software development. We leveraged our global network of software development providers to capture how AI adoption is evolving across teams, geographies, and company sizes. Our previous survey from 2024 is available here: Impact of AI in Software Development, A Survey-Based Analysis 2024.

In 2024, artificial intelligence in software development was characterized primarily by experimentation and cautious implementation. Companies tested tools, explored use cases, and evaluated the impact, often with widely varying strategies and limited internal expertise. Developers, in turn, wondered, "Will programmers be replaced by AI?"

The 2025 survey revisits the landscape with a sharper focus. It explores how Artificial Intelligence adoption has evolved over the past year: what's working, where companies and developers are struggling, and how attitudes, practices, and capabilities are maturing. With responses from a globally diverse group of professionals, this research offers a comprehensive snapshot of where the industry stands today and where it's heading next.

Executive Summary

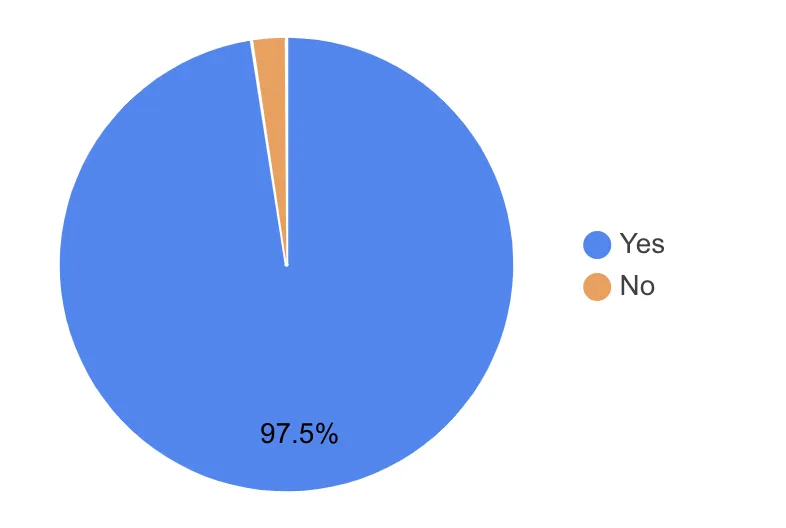

- In 2025, the attitude toward artificial intelligence has changed from an experimental approach to regular day-to-day practice. The adoption rate has reached 97.5% across companies of all sizes, making artificial intelligence an integral part of internal processes for nearly every software development provider.

- Key use cases include code generation, documentation generation, code review and optimization, and automated testing and debugging. The impact of AI on software development is tangible and measurable: 82% of respondents reported at least a 20% productivity boost, and 24% reported more than 50%.

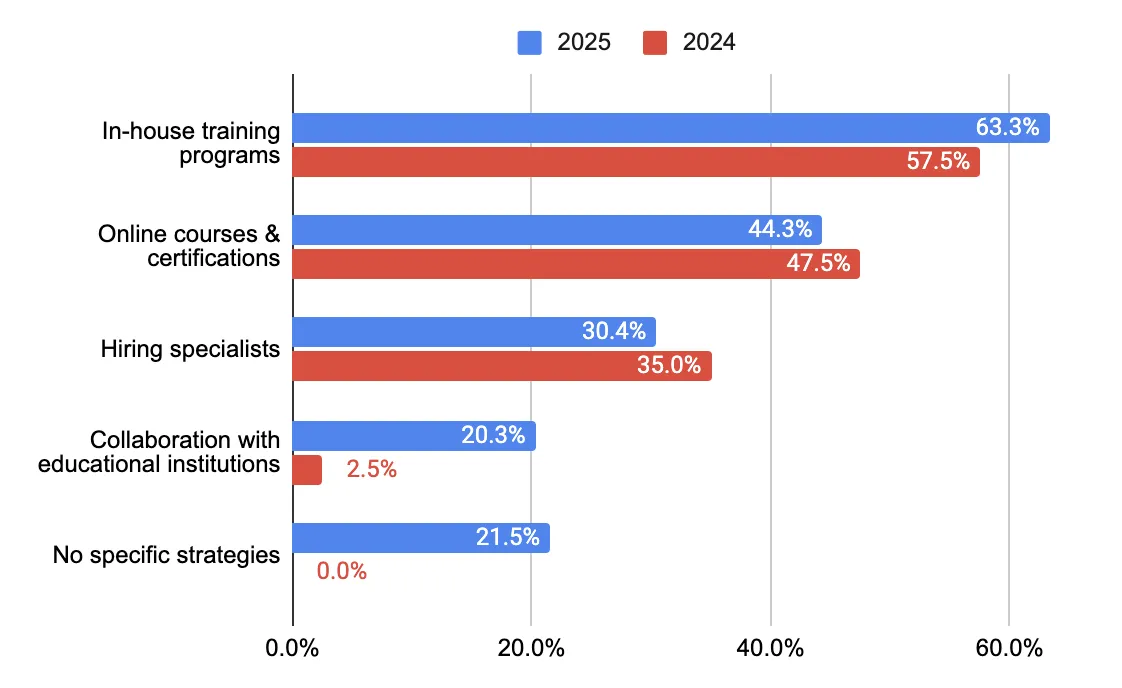

- Companies are building in-house AI expertise instead of relying on third-party tools or providers. 63.3% of respondents nurture the skills of their employees through in-house training programs.

- AI maturity brings challenges: transparency, ethics, and data privacy have become significant concerns. The question is no longer if artificial intelligence should be used, but how to govern and sustain it responsibly.

2025 marks a shift. The industry has moved from adopting artificial intelligence to being accountable for it.

Respondent Profile

Demographics

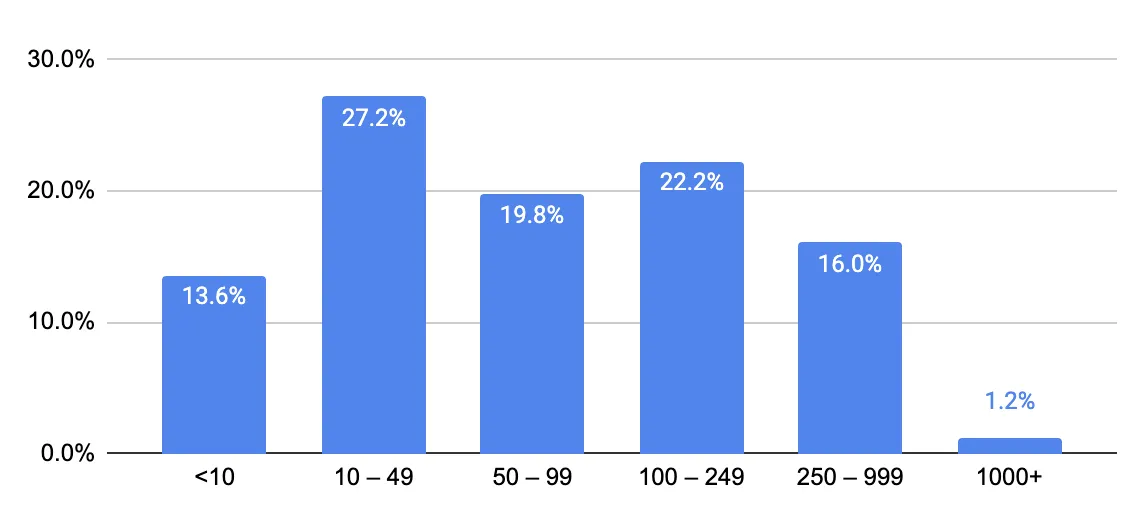

The majority of survey respondents represent small to mid-sized software development companies. Specifically:

- Over 80% of respondents work at companies with fewer than 250 employees.

- The largest segment (27.2%) consists of small businesses with 10–49 employees.

- Only 1.2% of responses came from large enterprises with more than 1000 employees.

The company size distribution reflects a sample dominated by small and mid-sized companies, which tend to embrace innovation and adopt new technologies quickly.

Respondent Roles

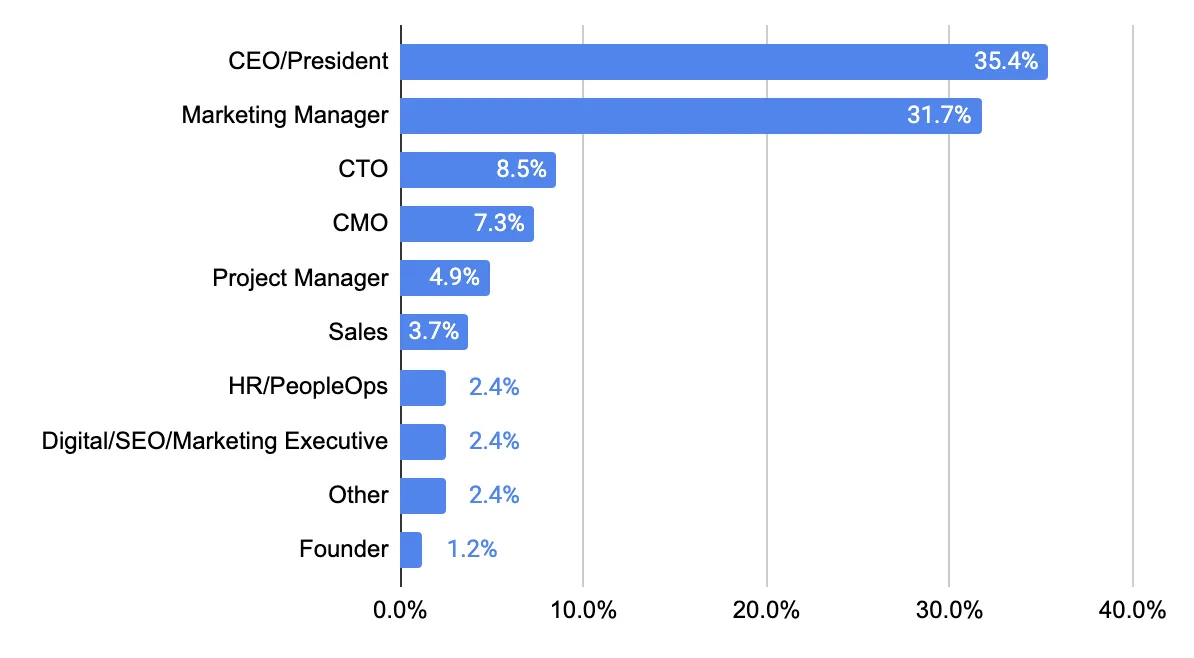

The survey reached a broad mix of roles in software development companies, including executive, technical leadership, and business roles:

- Just over two-thirds of respondents are CEOs or Presidents (35.4%) or Marketing Managers (31.7%).

- Technical leadership was represented by CTOs (8.5%) and Project Managers (4.9%).

Additional respondent roles are CMOs (7.3%), Sales (3.7%), HR (2.4%), Digital/SEO/Marketing executives (2.4%), founders (1.2%), and roles that didn't fit into standard categories (2.4%).

This breakdown shows that AI adoption concerns the whole organization, not only the engineering teams.

Office Location

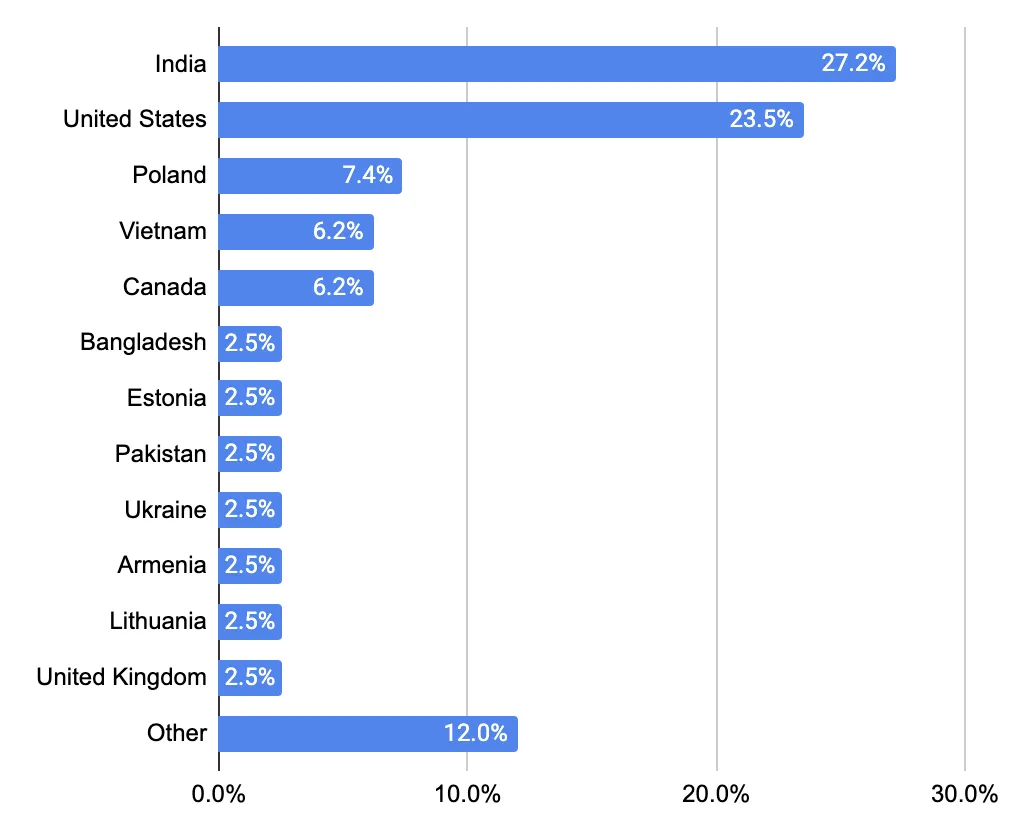

Respondents came from a wide geographic range, with the majority headquartered in several tech hubs:

- India (27.2%) and the United States (23.5%) together account for over half of all responses.

- Other notable contributors include Poland (7.4%), Vietnam (6.2%), and Canada (6.2%).

- Bangladesh, Estonia, Pakistan, Ukraine, Armenia, Lithuania, and the United Kingdom each made up around 2.5% of the total.

The remaining 12% of participants came from countries with only a few respondents each: Zimbabwe, Hungary, China, Portugal, Croatia, North Macedonia, Macedonia, Spain, Brazil, Cyprus, Hong Kong, and the Netherlands.

This diverse participation highlights the global adoption of Artificial Intelligence in software development, extending far beyond the traditional tech centers.

Artificial Intelligence Adoption: Where Are We Now?

Key Insights:

- 97.5% of companies have adopted artificial intelligence in software engineering, up from 90.9% in 2024.

- Artificial Intelligence adoption is approaching industry-wide saturation.

- The 2.5% of companies that have not adopted AI cite cost, integration complexity, and unclear ROI as the main barriers.

Current Adoption Rate

According to the 2025 survey:

- 97.5% of companies report that they are currently integrating AI technologies in software development processes.

- Only 2.5% of respondents have not yet adopted AI.

In comparison, the 2024 survey showed that 90.9% of companies had already adopted AI, while 9.1% had not. This year-over-year shift indicates a clear trend: AI is approaching near-complete saturation across the industry.

Reasons for Not Implementing AI

Among the small minority (2.5%) of companies that have not yet adopted AI, several key barriers were identified:

- High implementation costs or a lack of financial resources to start implementing AI for software development.

- The complexity of integrating artificial intelligence with existing systems or workflows (mentioned twice, indicating a recurring concern).

- No clear business need or uncertain benefits from Artificial Intelligence adoption.

These challenges suggest that for the remaining holdouts, the hesitation is not about skepticism toward artificial intelligence itself but rather about feasibility, compatibility, and ROI.

Future Plans for Artificial Intelligence Adoption

Among the 2.5% of companies that have not yet adopted AI:

- 50% of companies have no intention of integrating AI in software engineering in the foreseeable future.

- 50% indicated a timeline of 12+ months, suggesting a slower, more cautious approach.

This signals that while artificial intelligence is nearing full adoption, a small segment of companies remains unconvinced of its value for software engineering or simply lacks the resources to implement it.

AI in Action: Use Cases, Implementation Approaches, Goals, Results

Key Insights:

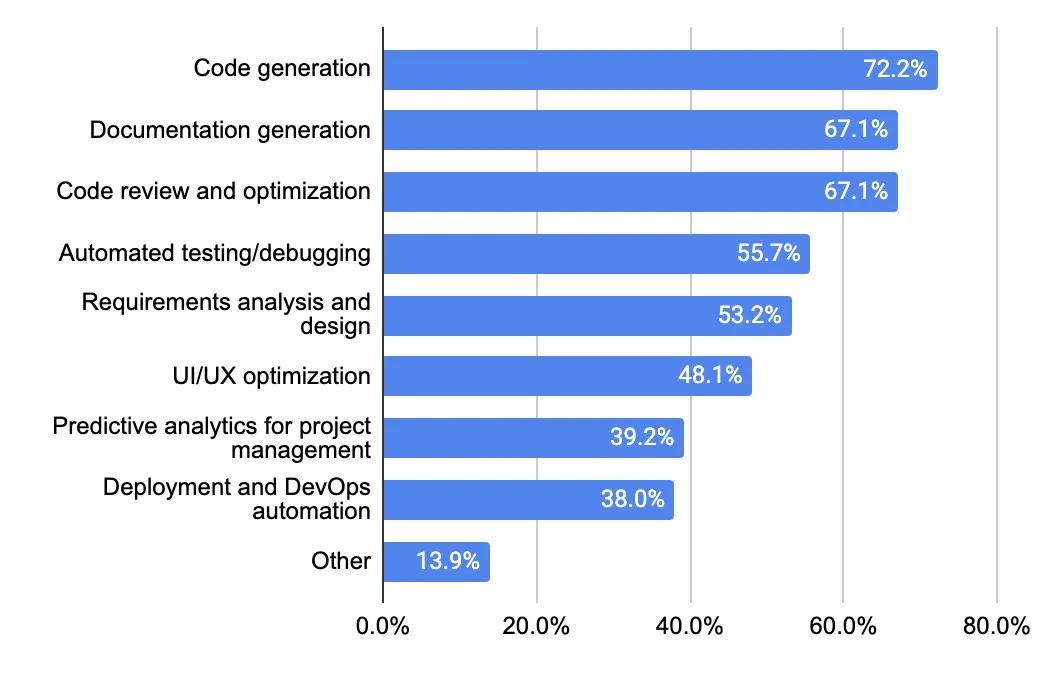

- AI is now core to the SDLC: 72.2% use it for code generation, 67.1% for documentation and review.

- Adoption is moving upstream: 53.2% use AI in requirements analysis and 48.1% in UI/UX, marking a shift from execution to planning.

- New mainstream areas in 2025: documentation generation, code review, and DevOps automation (38.0%). AI now supports full-cycle delivery.

- 13.9% report custom use cases: From architecture to marketing, the industry shows growing creativity beyond developer tooling.

- Maturity is rising: 49.4% have used artificial intelligence for more than 1 year (vs. 32.5% in 2024); only 2.5% are new adopters.

- Impact is real: 92.4% report positive SDLC effects; 82.3% gained ≥20% productivity, 24.1% exceeded 50%.

Areas of Utilization

AI is being applied across nearly every stage of the software development lifecycle, from planning to deployment. The 2025 survey reveals a clear hierarchy of use cases, with certain areas showing particularly high adoption:

- Code generation leads the list, with 72.2% of companies using artificial intelligence to assist or automate code writing.

- Documentation generation (67.1%) and code review and optimization (67.1%) follow closely, highlighting AI's role in producing documentation and maintaining code quality.

- Automated testing and debugging is used by 55.7% of companies, showing AI's role in improving software reliability.

- Requirements analysis and design (53.2%) and UI/UX optimization (48.1%) show that artificial intelligence is extending into earlier and more subjective stages of the development process.

- Predictive analytics for project management (39.2%) and deployment/DevOps automation (38%) round out the top categories.

In addition to the major categories, 13.9% of respondents mentioned niche or company-specific use cases grouped under "Other." These included:

- Architectural design.

- DevOps pipelines and CI/CD.

- Bug detection and fixing.

- Voice interfaces and AI-powered design tools.

- Security enhancement.

- Refactoring and optimization.

- Project management and scoping.

- Client communication.

- Marketing.

- ATS integration.

- AI project development as a core service.

These responses reflect the growing creativity in how artificial intelligence is being applied, moving beyond developer tooling into broader business processes and product layers.

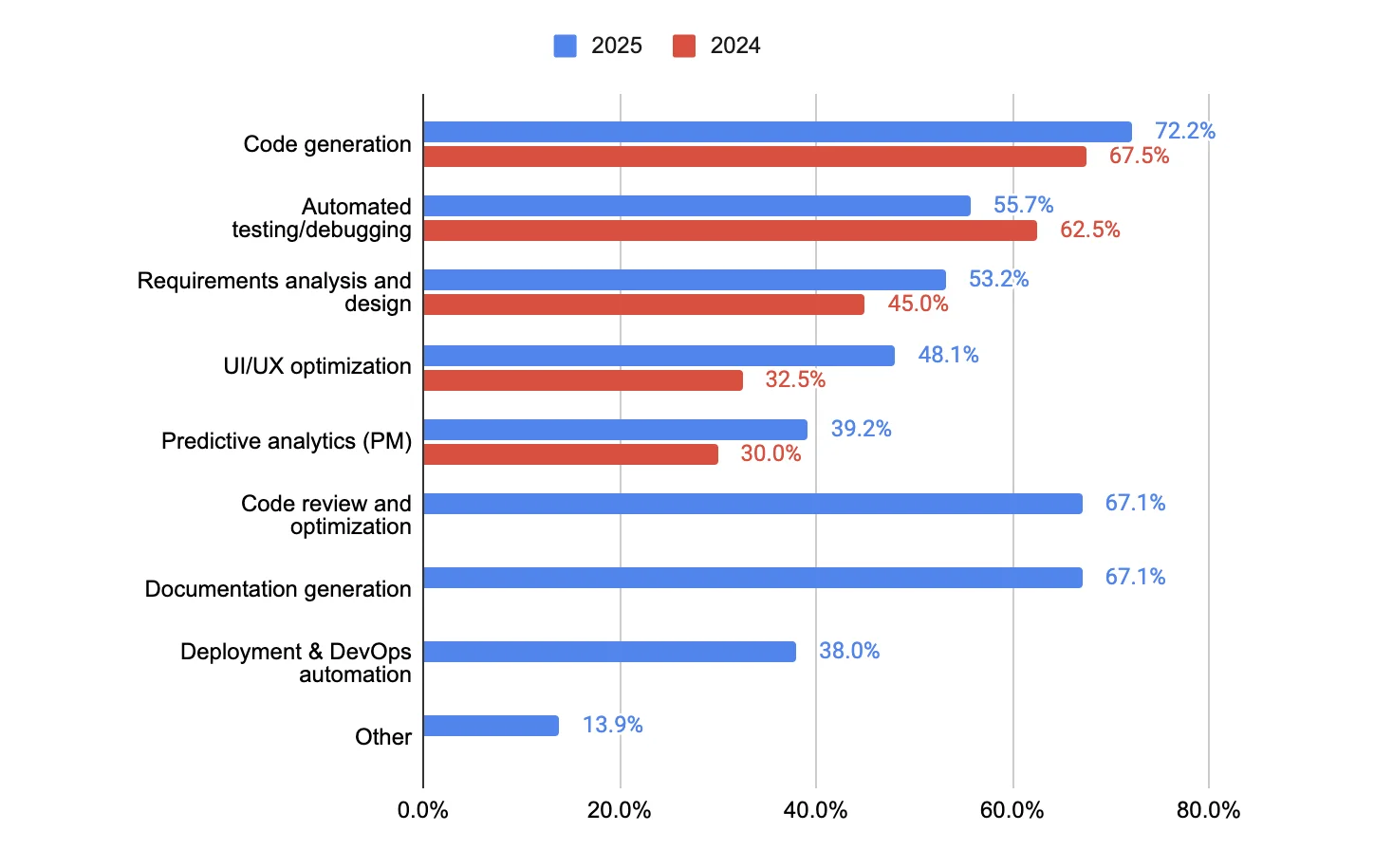

Areas of Utilization 2025 vs 2024 Comparison

AI is now deeply embedded across multiple stages of the software development lifecycle. Comparing the 2025 results with 2024 reveals several shifts in where companies apply it.

Key Shifts Since 2024

- Code generation remains the leading application, with usage rising from 67.5% to 72.2%.

- Automated testing/debugging declined from 62.5% to 55.7%, possibly as companies spread AI across a wider range of use cases.

- Requirements analysis, UI/UX optimization, and predictive analytics showed notable growth.

- Documentation generation and code review/optimization emerged as new high-ranking use cases in 2025, absent from last year's top list.

- The appearance of Deployment and DevOps automation in 2025 reflects AI's increasing impact on operations, not just development.

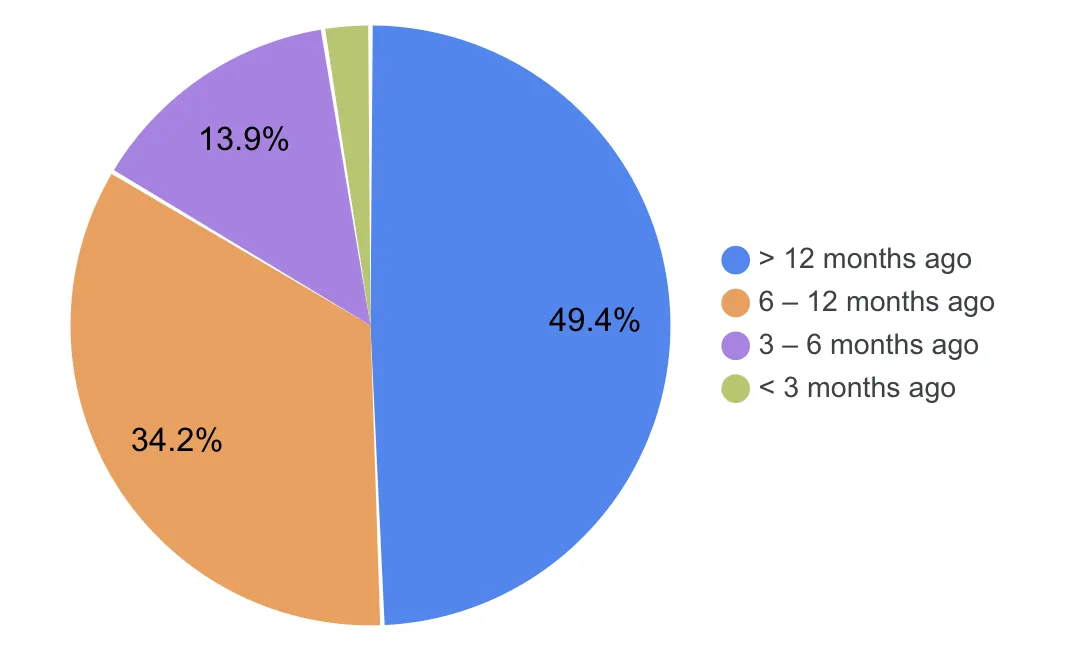

Maturity and Timeline of Implementation

- Nearly half of the companies (49.4%) have been using AI tools in software development for over a year.

- 34.2% started integrating artificial intelligence 6–12 months ago.

- 13.9% began 3–6 months ago.

- Only 2.5% are new adopters, having started within the last 3 months.

Maturity and Timeline 2025 vs 2024

In 2024, only 32.5% of companies had used artificial intelligence for over a year. This figure jumped to 49.4% in 2025. Meanwhile, the proportion of recent adopters has declined, especially in the under-3-months category (from 7.5% in 2024 to 2.5% in 2025).

AI and software engineering are now closely intertwined. This shift reflects a maturing ecosystem: more companies are moving from experimentation to long-term artificial intelligence integration.

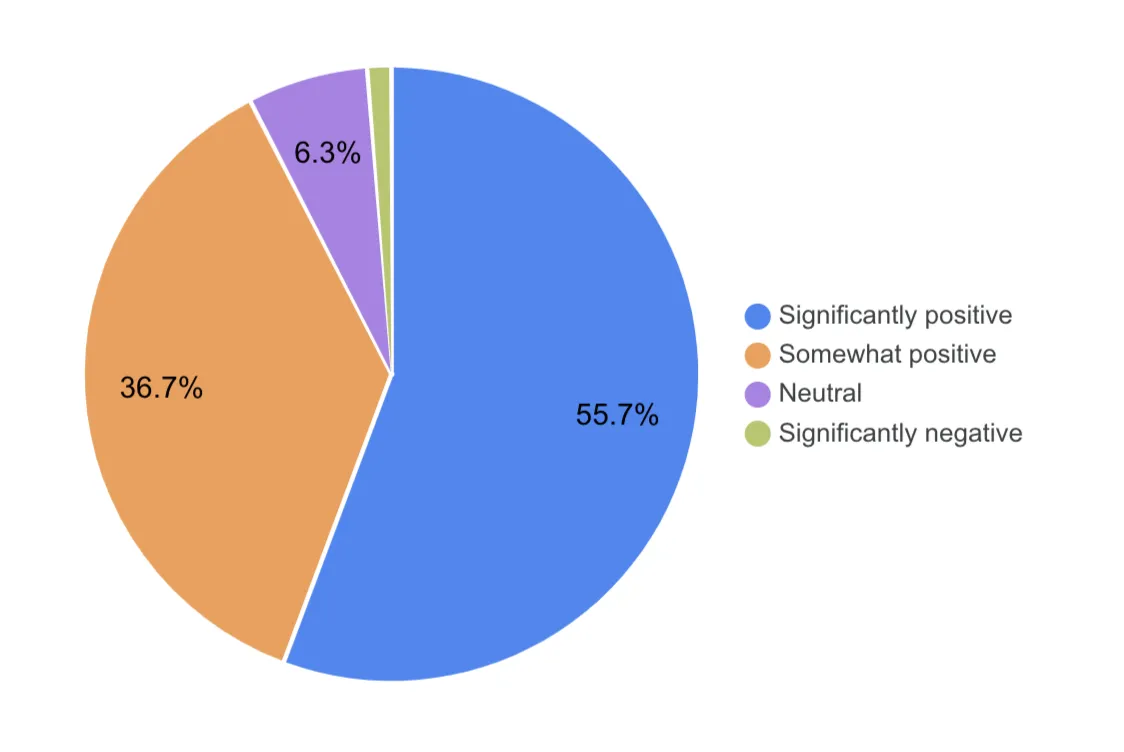

Perceived AI Impact on Software Development Life Cycle (SDLC)

In 2025, the vast majority of respondents view artificial intelligence as a net positive in the software development lifecycle:

- 55.7% describe the impact as significantly positive

- 36.7% as somewhat positive

- Only 6.3% were neutral, and 1.3% saw a negative impact

Compared to 2024, the perception has become more optimistic. A year earlier, only 40% of companies reported a significant improvement, and 17.5% were unsure of the impact.

Measured Productivity Gains

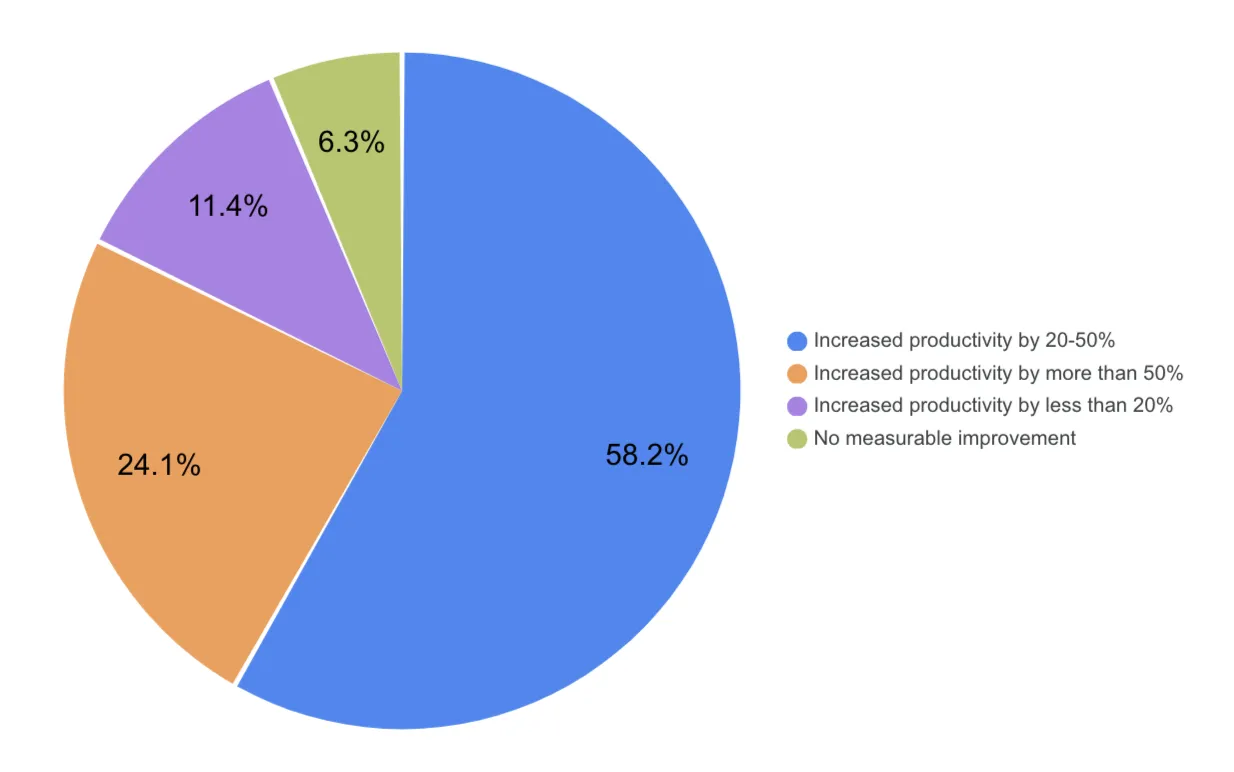

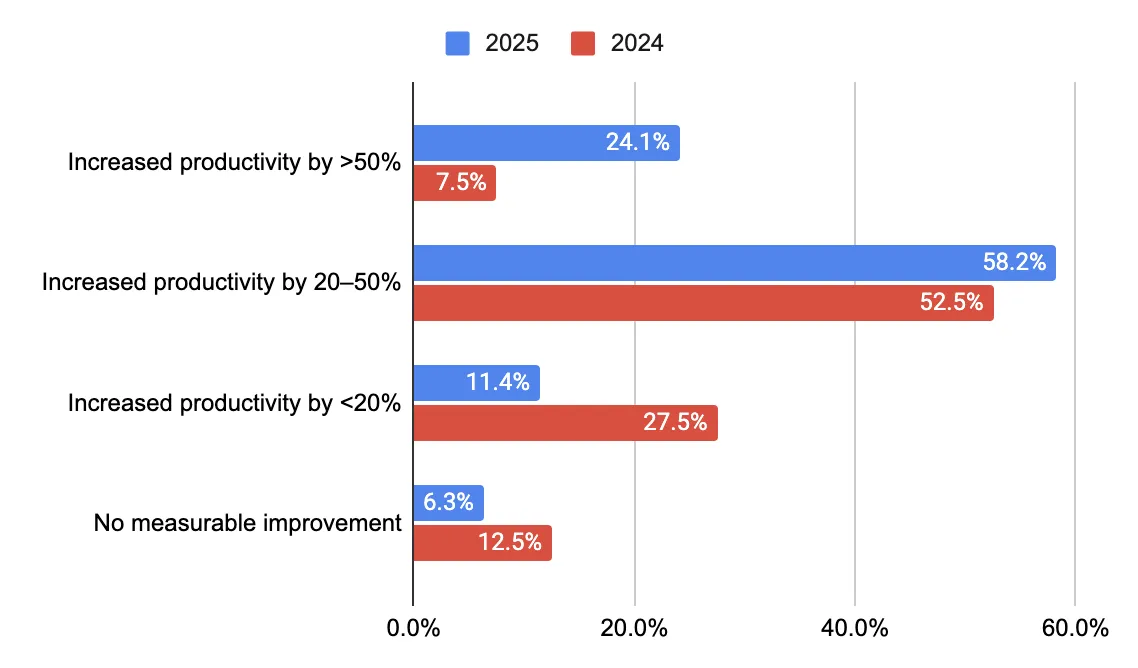

When asked about actual productivity improvements in 2025:

- 58.2% of companies reported a 20–50% productivity increase.

- 24.1% reported an increase of more than 50%.

- 11.4% saw an improvement of less than 20%.

- 6.3% observed no measurable improvement.

This marks a clear upward shift in the benefits of AI in software development compared with 2024, when:

- 52.5% reported a 20–50% gain.

- Only 7.5% had improvements above 50%.

- 27.5% had minor gains under 20%.

Taken together, the 2025 data shows that both the perceived value and the tangible benefits of AI in software development have grown: measurable productivity gains are becoming larger and more widespread.
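The "at least 20%" figures can be reproduced by summing the two upper buckets of the distribution. The following is a minimal Python sketch using only the percentages reported above; because the published 2024 buckets do not add up to 100%, no "no improvement" value is assumed for that year, and the bucket names are illustrative labels, not survey field names.

```python
# Productivity-gain distribution (% of respondents) as reported in the survey.
# Bucket keys are illustrative labels chosen for this sketch.
GAINS_2025 = {"over_50": 24.1, "20_to_50": 58.2, "under_20": 11.4, "none": 6.3}
# Only the 2024 buckets that were reported; they do not sum to 100%.
GAINS_2024 = {"over_50": 7.5, "20_to_50": 52.5, "under_20": 27.5}


def share_at_least_20(dist: dict[str, float]) -> float:
    """Share of respondents reporting a productivity gain of 20% or more."""
    return round(dist["over_50"] + dist["20_to_50"], 1)


if __name__ == "__main__":
    for year, dist in (("2025", GAINS_2025), ("2024", GAINS_2024)):
        print(f"{year}: {share_at_least_20(dist)}% reported gains of 20% or more")
    # How many times larger the "more than 50%" share is in 2025 vs 2024.
    ratio = GAINS_2025["over_50"] / GAINS_2024["over_50"]
    print(f"Share above 50% grew {ratio:.1f}x")
```

This yields 82.3% for 2025 versus 60.0% for 2024, and shows that the share of companies reporting gains above 50% grew roughly 3.2 times.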

AI Talent and Skill Landscape

Key insights:

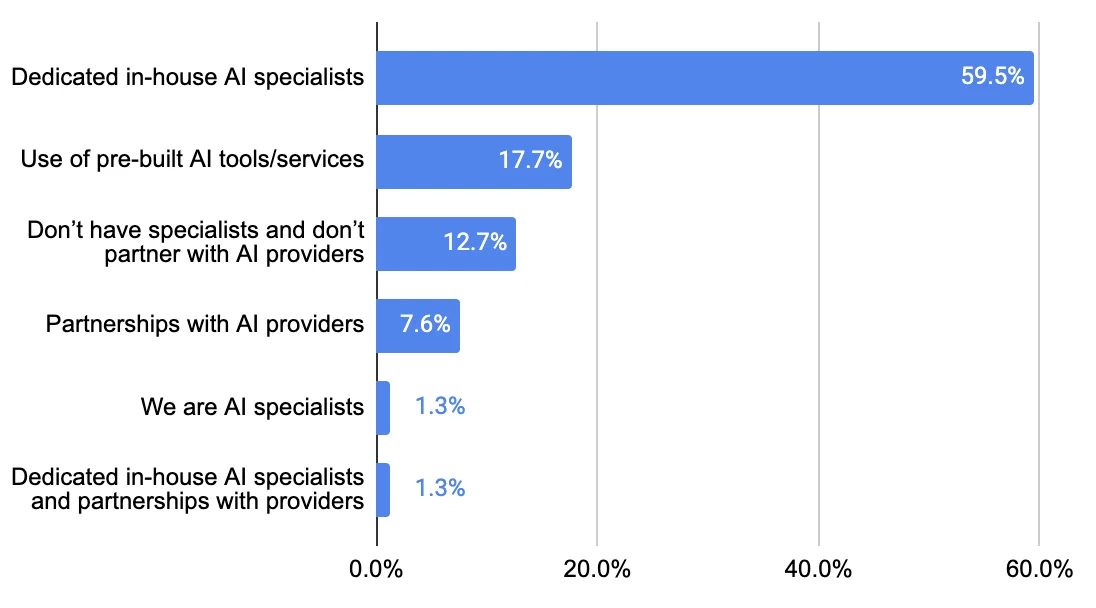

- In 2025, 59.5% of companies rely on in-house AI specialists, highlighting a strong tendency toward building internal AI development teams.

- Dependence on pre-built AI tools dropped to 17.7%, signaling a reduced appetite for off-the-shelf solutions.

- Despite progress, 12.7% of companies still lack both in-house expertise and external support, pointing to resource gaps.

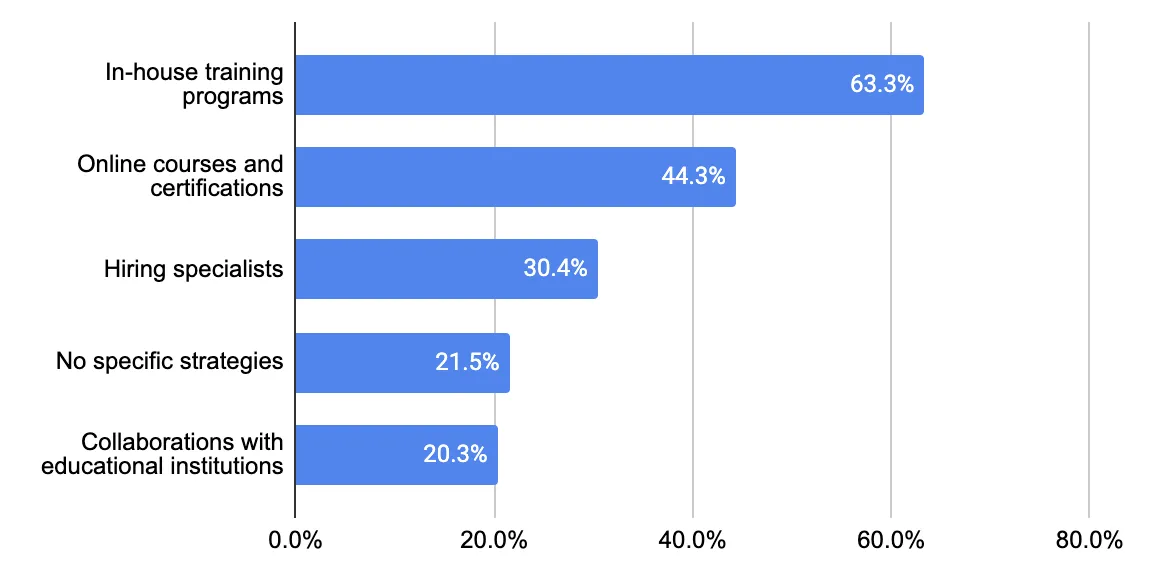

- The most common training strategy is in-house programs (63.3%), followed by online courses and external hiring, but more than 1 in 5 companies (21.5%) still have no defined upskilling strategy.

- Collaborations with universities have grown from 2.5% to 20.3%, reflecting the early stages of industry-academia alignment on AI.

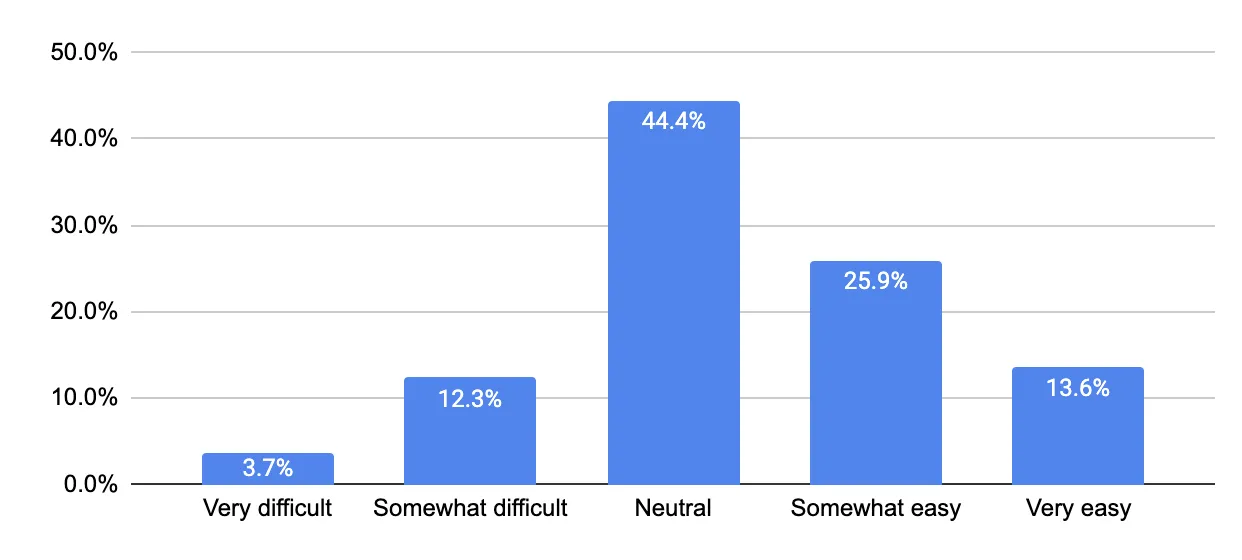

- The majority of companies (83.9%) rate talent acquisition as easy or neutral, and only 16.1% find it difficult, indicating that talent scarcity is no longer a universal bottleneck.

- The focus shifted from "Will AI replace software engineers?" to "How can junior developers get experience in the era of Artificial Intelligence?".

Talent and Artificial Intelligence Expertise

In 2025, most companies prioritize internal capability building when it comes to AI:

- 59.5% have dedicated in-house AI specialists: this indicates a strong focus on nurturing internal expertise rather than relying on third-party tools and providers.

- 17.7% use pre-built AI tools and services: a distant second behind in-house expertise.

- 12.7% report having no in-house expertise or third-party partnerships: these are likely early-stage adopters or companies with serious resource constraints.

- 7.6% partner with external Artificial Intelligence providers.

- 1.3% identify as Artificial Intelligence providers themselves or combine internal teams with external partners.

This distribution shows a clear preference for building in-house expertise, while a significant minority still depends on external tools or services.

Talent and Artificial Intelligence Expertise 2025 vs 2024: Shifting Patterns

The data reveals a clear shift from external reliance to internal capability building. In 2024, 30% of companies used pre-built AI tools, and 45% had in-house AI specialists. In 2025, reliance on tools dropped to 17.7%, while internal specialists rose to 59.5%. This trend suggests a growing demand for customization, control over core capabilities, and long-term scalability.

Yet a gap between ambition and available talent persists for some companies. Despite the strategic move inward, finding and retaining AI-skilled professionals remains a challenge for a share of respondents, reinforcing the need for sustained investment in training, recruitment, and talent partnerships.

Training Strategies for Skill Development in 2025

To support AI integration, companies are actively investing in their developers' skills through a variety of approaches:

- 63.3% offer in-house training programs, making this the most common strategy. This is a pragmatic choice, since in-house programs allow tailored instruction aligned with company-specific tools, workflows, and use cases.

- 44.3% use online courses and certifications, leveraging platforms like Coursera and Udemy to build in-house expertise in AI-assisted programming.

- 30.4% focus on hiring specialists, bringing in external AI talent rather than reskilling internal teams; competing for skilled developers remains standard practice in the current AI landscape.

- 21.5% admitted to having no specific strategy.

- 20.3% collaborate with educational institutions: a method that has grown significantly, especially among enterprise-level companies.

Training Strategies: 2025 vs 2024 Comparison

In-house training became even more widespread in 2025, showing that companies increasingly view long-term upskilling as a strategic investment. The rise of collaborations with educational institutions (from 2.5% to 20.3%) suggests stronger ties between industry and academia, possibly to address talent shortages more sustainably.

Online certification use remained high but dipped slightly, perhaps due to a shift toward internal and formalized programs. The decline in hiring external specialists may reflect market saturation, rising costs, or a preference for internal development.

A new category, companies with no clear strategy, highlights that while Artificial Intelligence adoption is nearly universal, skill development efforts are not yet uniform.

Talent Availability: Is It Hard to Hire AI-Ready Developers?

Despite AI's growing presence in software development, the hiring landscape is not as dire as expected. In fact, the 2025 survey shows that for many companies, finding developers with AI expertise is manageable or even easy.

- 44.4% of companies rated the process as neutral, indicating that finding a skilled developer isn't a major roadblock.

- 39.5% found it somewhat or very easy. This may reflect the relatively gentle learning curve of AI-assisted programming, the availability of AI-related education, or overall market conditions; either way, a large share of respondents see no major challenges in hiring AI developers.

- Only 16.1% of respondents described hiring as somewhat or very difficult: a relatively low number considering the field's rapid evolution.

This distribution suggests that talent shortages are not an acute problem for the majority of development providers. As more developers gain exposure to AI tools (e.g., GitHub Copilot, TensorFlow, OpenAI APIs) and as companies refine what "AI expertise" actually means, the pressure on hiring has eased.

Still, the neutral middle suggests caution. Many companies may be adapting expectations, relying more on internal upskilling or hybrid profiles rather than seeking full-stack AI specialists.

Objectives and Challenges of Artificial Intelligence Adoption

Key Insights:

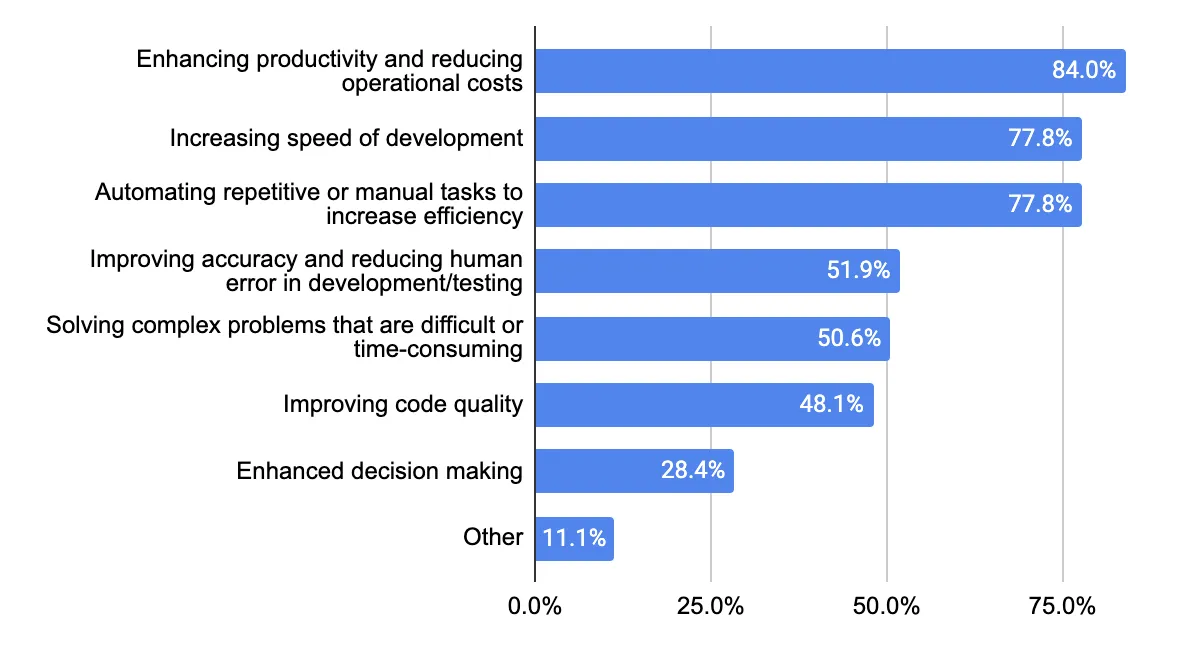

- 84% of companies adopt AI to boost productivity and cut costs.

- 77.8% seek to accelerate delivery, and the same share aim to reduce manual work.

- About half use AI to reduce errors, solve complex tasks, and improve code quality.

- Strategic use is growing: 28.4% apply AI for decision-making.

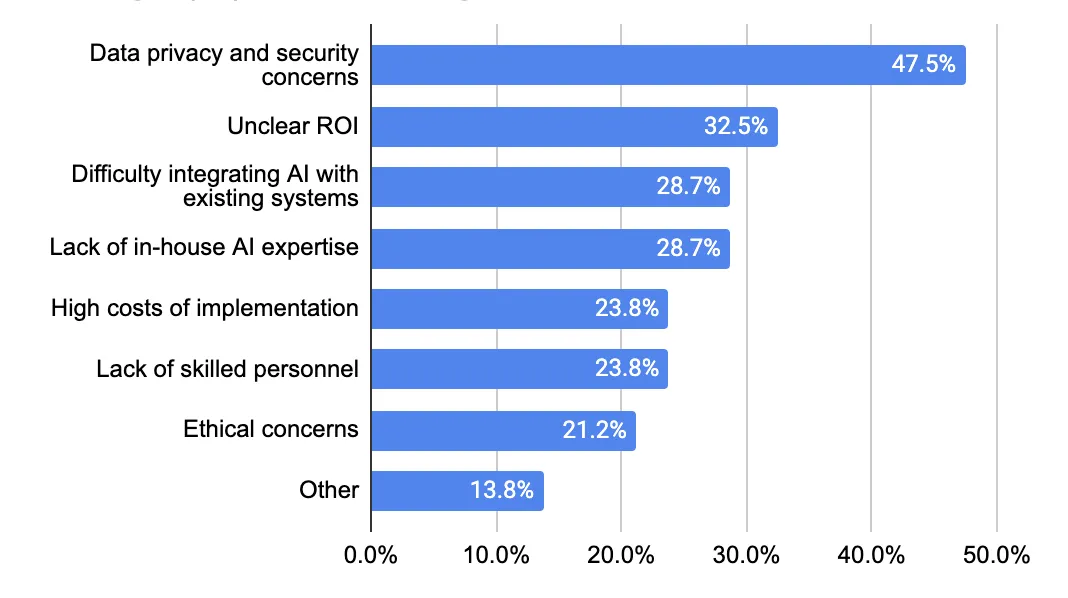

- The top barrier is data privacy and security (47.5%).

- Other common challenges include unclear ROI, integration issues, and a lack of in-house expertise.

- Ethical concerns and organizational resistance slow adoption, even when technical capacity exists.

Objectives of Artificial Intelligence Adoption

Respondents' answers indicate that companies have clearly defined goals and objectives when it comes to AI implementation:

Top Priorities: Effectiveness and Speed

- The most cited, and most evident, objective is enhancing productivity and reducing operational costs. 84% of respondents selected this as a primary reason for adopting AI. The goal here is simple: to do more with less effort.

- Close behind, 77.8% of companies aim to increase development speed, and another 77.8% prioritize automating repetitive or manual tasks. Speed matters, and the ability to accelerate software delivery cycles is extremely valuable.

In practice, these goals amount to shipping more software without growing development teams.

Secondary Goals: Quality and Accuracy

- 51.9% of companies use AI to improve accuracy and reduce human error in development and testing.

- 50.6% turn to AI to solve complex or time-consuming problems, going beyond routine automation.

- 48.1% see value in improving code quality through methods such as AI-driven code review and refactoring automation.

Strategic Use: Decision Support and More

- 28.4% use AI for enhanced decision-making, such as through predictive analytics, risk modeling, or real-time insights.

- A small group (11.1%) listed "Other" objectives, including the development of client-facing AI features or differentiating their product offering using the advanced analytics the technology brings.

These results are telling: companies are pursuing tangible, specific, and measurable goals. The technology is no longer adopted because it is trending; businesses understand the value behind using it.

Challenges & Barriers to AI Integration

Despite the high adoption rate of AI in software development, challenges remain. The most common ones cited by survey participants include:

- Data privacy and security concerns (47.5%): this remains the top issue. AI tools have to deal with sensitive business data, which makes companies cautious about possible data leaks or inappropriate usage of their data.

- Unclear return on investment (32.5%): for many teams, especially in smaller companies, it's a tough task to quantify and measure the results of Artificial Intelligence adoption.

- Integration with existing systems (28.7%): legacy infrastructure is another tough challenge for well-established businesses since not all legacy software supports modern tech stacks.

- Lack of in-house expertise (28.7%): many teams struggle to build or access the necessary technical skills.

- High implementation costs (23.8%) and shortage of skilled personnel (23.8%).

- Ethical concerns (21.2%): some companies hold back from artificial intelligence because of ethical concerns, likely including fairness, accountability, or the long-term impact on their businesses and teams.

In open comments, respondents revealed more nuanced and, at times, philosophical challenges:

- Over-reliance on AI degrades the critical thinking of junior developers.

- Cultural resistance or uncertainty around who should "own" AI in cross-functional teams.

- Low confidence in AI-generated output, leading to additional rounds of refactoring.

- Time constraints: teams are too busy with core tasks to explore new AI tools.

- Resource limitations: some can't afford specialists or doubt the return from outsourcing.

- Misalignment with the current workload: companies don't have enough relevant AI-scale tasks to justify dedicated hires.

These responses suggest that some barriers to AI are also organizational and cognitive. AI changes not only how teams work but also how they think and prioritize.

Legal, Ethical & Regulatory Considerations

Key Insights:

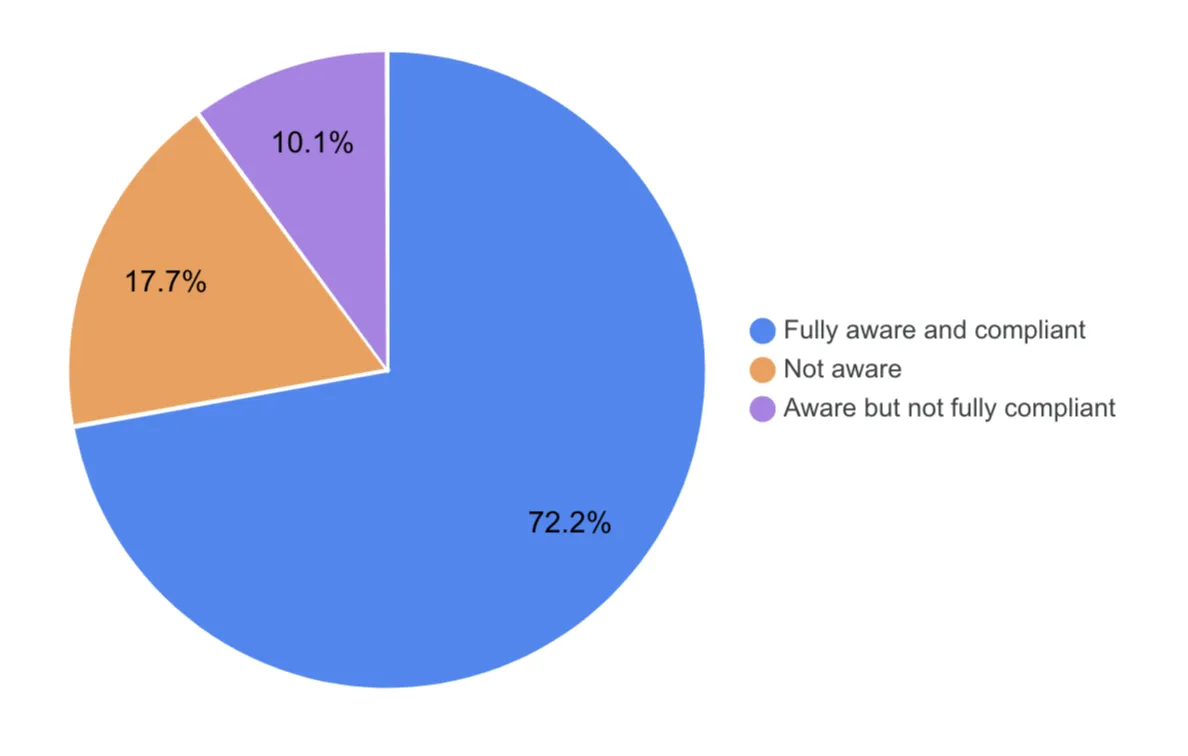

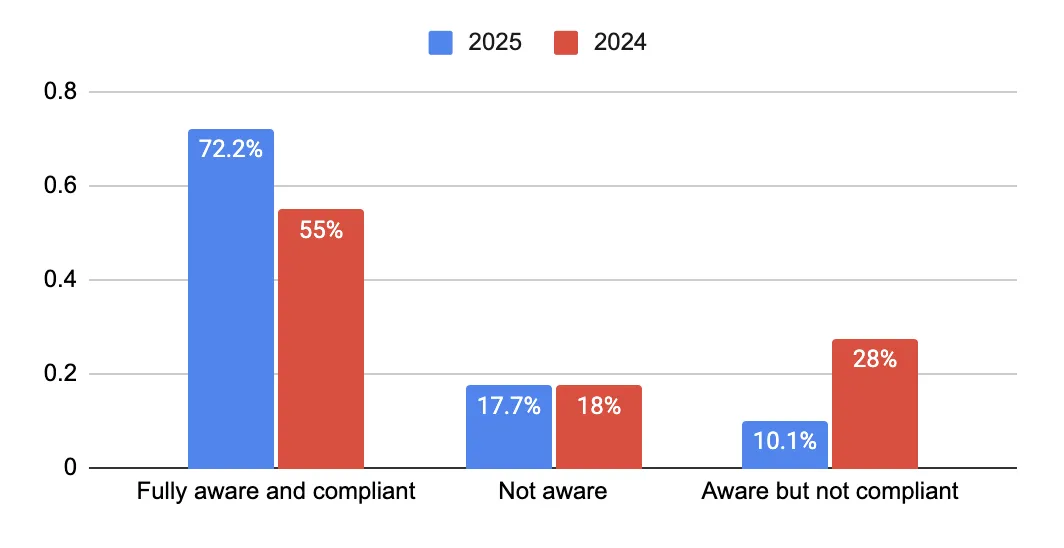

- 72.2% of companies are now fully aware and compliant with artificial intelligence regulations, up from 55% in 2024.

- Non-compliance dropped: only 10.1% are aware but not compliant, vs 27.5% last year.

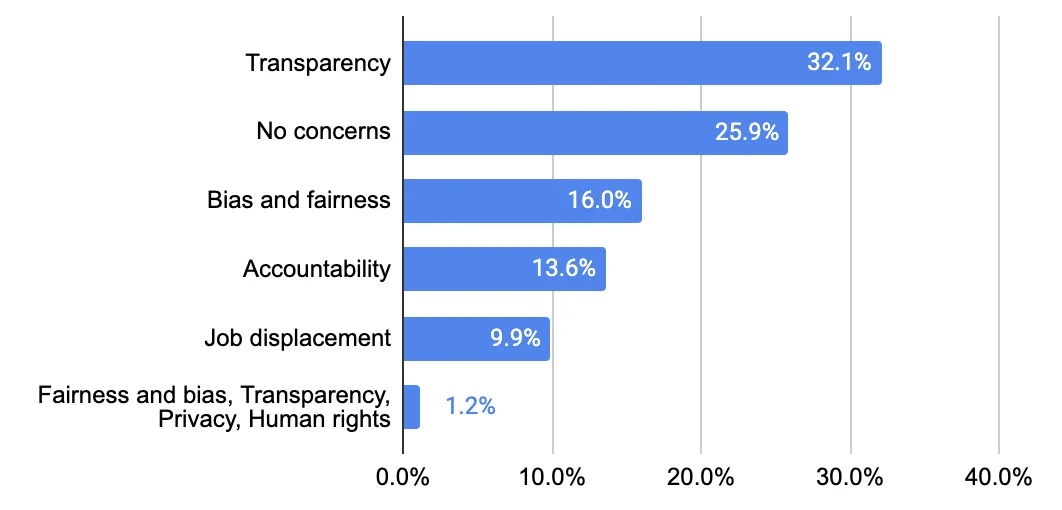

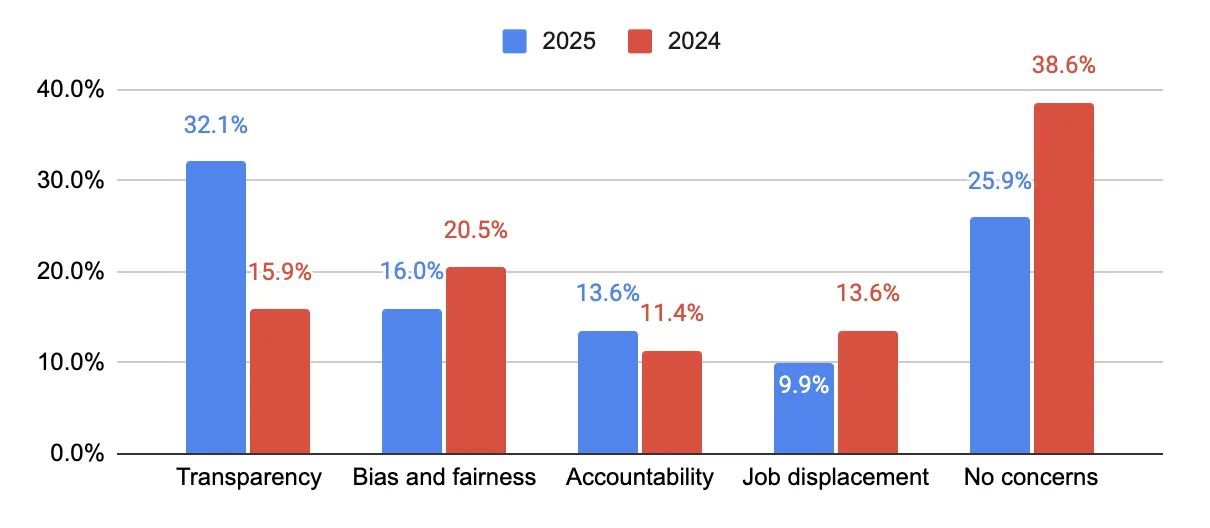

- Ethical awareness is rising: 32.1% cite transparency as the top concern, more than doubling since 2024.

- The share of companies reporting no ethical concerns fell from 38.6% to 25.9%, indicating growing responsibility.

- Likely mitigation strategies include internal codes of ethics, transparency measures, and cross-functional review, even if not always formalized.

- The data signals a shift from awareness to structured action as companies align artificial intelligence use with legal and ethical expectations.

Legal and Compliance Awareness

As artificial intelligence technologies continue to evolve, legal and regulatory awareness is becoming more widespread and more structured. In 2025:

- 72.2% of respondents report being fully aware and compliant with relevant artificial intelligence regulations.

- 17.7% are not aware of any legal requirements.

- 10.1% are aware but not yet compliant.

Legal and Compliance Awareness 2025 vs 2024

In 2025, 72.2% of companies report being fully aware and compliant with artificial intelligence regulations, up from 55% in 2024. At the same time, the share of those aware but not compliant dropped from 27.5% to 10.1%.

This shift reflects a clear move toward regulatory maturity as more companies turn awareness into action. While the number of completely unaware respondents remains steady (~17%), the industry overall is taking legal responsibility more seriously than it did a year ago.
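The year-over-year shifts above can be checked with simple percentage-point arithmetic. The following is a minimal Python sketch using only the shares reported in this section. The 2024 "not aware" share is not stated directly; it is derived here as a remainder, under the assumption that the three answer options covered all respondents in both years.

```python
# Compliance-awareness shares (% of respondents) as reported in the survey.
REPORTED = {
    "aware_and_compliant": {"2024": 55.0, "2025": 72.2},
    "aware_not_compliant": {"2024": 27.5, "2025": 10.1},
}


def pp_change(category: str) -> float:
    """Percentage-point change from 2024 to 2025 for one answer option."""
    shares = REPORTED[category]
    return round(shares["2025"] - shares["2024"], 1)


def implied_unaware(year: str) -> float:
    """Share not aware of any requirements, derived as the remainder.

    Assumption: the three answer options cover 100% of respondents.
    """
    known = sum(shares[year] for shares in REPORTED.values())
    return round(100 - known, 1)


if __name__ == "__main__":
    for category in REPORTED:
        print(f"{category}: {pp_change(category):+} pp")
    for year in ("2024", "2025"):
        print(f"{year} not aware (implied): {implied_unaware(year)}%")
```

Under that assumption, compliance rose by 17.2 points, non-compliance fell by 17.4 points, and the implied unaware share moves only from 17.5% to 17.7%, which matches the roughly steady ~17% described above.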

Ethical Concerns

Companies were also asked which ethical issues they considered during artificial intelligence implementation. In 2025:

- Transparency is the top concern, cited by 32.1%.

- Bias and fairness come next at 16.0%.

- Accountability: 13.6%.

- Job displacement: 9.9%.

- A quarter (25.9%) report no ethical concerns.

- A few responses reflected the desire for multi-select options and broader frameworks (e.g., human rights, privacy).

Ethical Concerns 2025 vs 2024

In 2025, transparency emerged as the leading ethical concern, cited by 32.1% of companies, more than doubling compared to 2024 (15.9%). This signals a growing focus on trust and explainability in artificial intelligence systems.

At the same time, the share of companies reporting no ethical concerns dropped from 38.6% to 25.9%, suggesting that ethical awareness is becoming more widespread and taken more seriously across the industry.

Mitigation Strategies

To address the ethical and legal risks of Artificial Intelligence adoption, companies are employing a range of mitigation strategies, either formally or implicitly, through their actions. These strategies include:

- Establishing internal codes of ethics that guide artificial intelligence development practices.

- Increasing transparency in model behavior, data use, and decision processes.

- Adopting diversity-focused data practices to reduce bias.

- Educating employees about artificial intelligence implications and risks.

- Running cross-functional review processes to ensure accountability.

Although these strategies weren't the subject of a direct survey question, the high rate of compliance and rising attention to ethics strongly suggest that companies are moving toward more structured, proactive approaches to risk management.

Shifting Mindsets and Maturity

The 2025 data reflects a meaningful shift in mindset compared to the previous year. In 2024, many companies were still in exploratory stages: aware of risks but often lacking the structure to address them. By 2025, there is:

- A clear reduction in non-compliance.

- A drop in ethical indifference.

- A doubling of transparency awareness, signaling deeper engagement.

This points to an industry maturing in its ethical frameworks, governance practices, and readiness for public scrutiny. As Artificial Intelligence adoption becomes ubiquitous, trust, explainability, and responsibility are becoming as important as performance.

Outlook for the Next 3-5 Years

Key insights:

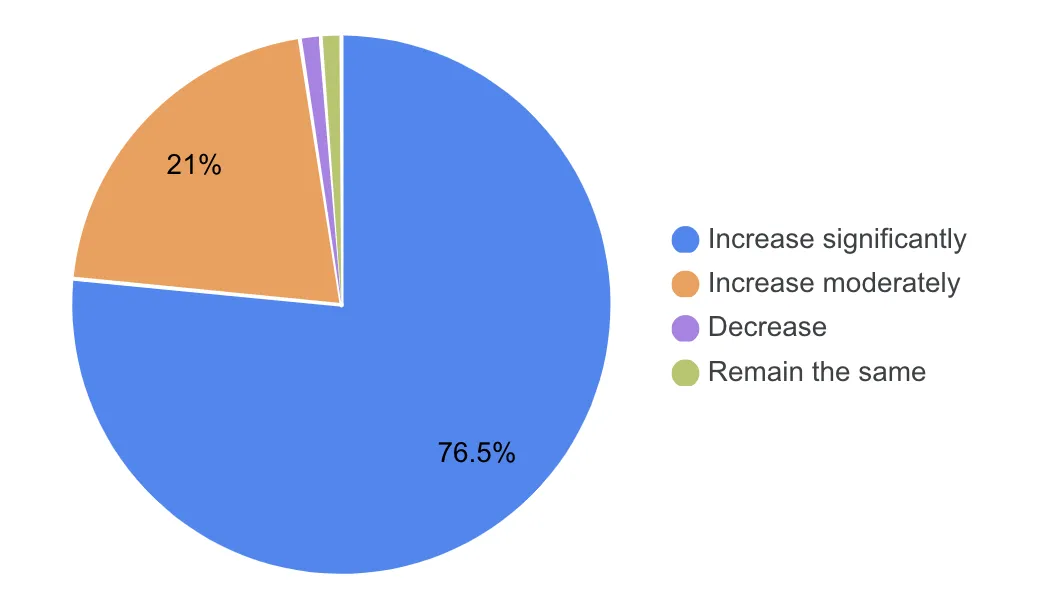

- 76.5% expect the role of Artificial Intelligence in software development to grow significantly (up from 70.5% in 2024).

- Agentic systems are emerging: companies are exploring agentic artificial intelligence for debugging, sprint planning, and workflow automation across QA, DevOps, and PM.

- Interest is rising in multimodal and on-device artificial intelligence (text, image, voice, video) for real-time and privacy-focused applications.

- Top concerns: skill loss, code quality, data privacy, over-reliance on AI, and junior role displacement.

How Respondents See The Role of AI

In 2025, 76.5% of respondents believe AI's role in software development will increase significantly, up from 70.5% in 2024. Another 21% expect a moderate increase, while only 2.5% foresee no growth or decline.

The trend is clear: confidence in AI's expanding role is growing. As in 2024, companies anticipate more automation, deeper integration into workflows, and a shift in developer skill sets to match this new reality.

Anticipated Trends in the Coming 5 Years

We also asked an open question inviting respondents to share specific artificial intelligence technologies or trends they are particularly excited about or planning to adopt. These responses help us outline the trend landscape for the coming years. We grouped the trends for simplicity:

Trend #1: The Rising Role of Generative AI

Mentions from respondents: GPT-4(o), Claude, Copilot, RAG, NL2Design, Sora, Diffusion models.

The most frequently cited trend is generative AI. Many respondents noted GitHub Copilot as already in use, while others referenced LLMs for:

- Automated code generation.

- Content and design creation.

- Smart assistants and copilots.

- Chatbot development.

Trend #2: Agentic AI

Mentions from respondents: agentic workflows, agentic systems, autonomous project agents.

A growing number of companies are exploring AI agents, autonomous systems capable of:

- Debugging and sprint planning.

- Workflow automation.

- Cross-functional support across QA, DevOps, and PM.

This suggests interest is moving beyond passive assistants to proactive decision-making systems and goal-oriented AI.

Trend #3: Multimodal & Real-Time AI

Mentions from respondents: multimodal AI, on-device AI, voice AI.

Tools that combine text, image, audio, and video understanding are on the rise, allowing developers to build richer, context-aware applications. Edge AI and on-device intelligence are also gaining traction for latency-sensitive, privacy-first deployments.

Trend #4: AI for Testing, QA, and DevOps (AIOps)

Mentions from respondents: test automation, defect prediction, AI-powered pipelines.

As mentioned, some respondents have already leveraged artificial intelligence for quality assurance automation.

Some of the most popular use cases are:

- Test case generation.

- Self-healing scripts.

- Intelligent bug detection.

- CI/CD optimization.

Quality assurance once moved from manual to automated testing. Now, it is moving toward adaptive, AI-driven quality assurance.

Trend #5: AI in Product Experience & Analytics

Mentions from respondents: behavioral prediction, personalization, CRO tools, and user analytics.

Companies are looking for ways to integrate artificial intelligence more deeply into product layers for personalization, user behavior modeling, and conversion rate optimization.

The goal here is simple: more personalized, user-centered digital products.

Trend #6: Emerging Experiments With AI Capabilities

Fewer respondents mentioned other ways they are experimenting with AI. Overall, the industry seems curious about its capabilities and is actively exploring the most effective strategies for leveraging it. Reported experiments include:

- Edge AI.

- Quantum AI.

- Digital twins.

- Human-robot collaboration.

- AI-powered security.

- AI democratization.

Many respondents noted that they are currently testing tools like Copilot, voice AI, and behavior prediction. Others have already implemented full agentic workflows and on-device machine learning.

Companies are no longer just "exploring" artificial intelligence; they know exactly what they need: faster coding, less routine work, and more personalized experiences.

Risks and Concerns: What Keeps Teams Cautious About AI?

Another open question asked about concerns related to AI adoption. Respondents showed strong excitement about AI, but many companies also voiced concerns about the technology. These concerns fall into several recurring themes:

- Skill degradation & over-reliance on AI: this is the most cited concern across all responses. Teams worry that junior developers will lose foundational coding skills, problem-solving ability, and architectural thinking. There's fear of a future workforce that is too dependent on AI-generated suggestions and has little understanding of how systems work "under the hood."

"Honestly, the biggest one is people skipping the basics. I've seen developers just copy what AI gives without checking if it actually makes sense."

- Code quality and security concerns: many respondents questioned the reliability and security of generated code. It may contain hidden bugs, vulnerabilities, or poor architectural patterns, or simply reproduce outdated or inappropriate advice found on the Internet.

- Privacy, compliance & data ethics: several participants raised concerns about unexplainable AI decisions and privacy violations. Others pointed out that the potential use of business data for model training, combined with little visibility into how models produce results and handle incoming data, poses compliance risks, especially in sensitive industries.

- Job displacement & talent pipeline disruption: many respondents expressed concern that junior roles are disappearing, making it harder to enter the industry and gain experience. Artificial intelligence is seen as potentially devaluing human labor and replacing engineers before they are fully trained. In other words, the question "Will AI replace programmers?" matters less than the problem of training the next generation of junior programmers.

A Note of Caution

Despite the optimism, responses from earlier in the survey highlight that this transformation must be managed carefully:

- Critical thinking must be preserved.

- Trust and transparency must be built into systems.

- Skill development must evolve alongside tooling.

In short, the next five years should focus more on responsible artificial intelligence than on simply better artificial intelligence.

Discussion

The 2025 survey reveals a pivotal shift in how artificial intelligence operates within the software development industry. The conversation has moved beyond initial exploration of AI capabilities toward treating Artificial Intelligence as an essential component of organizational development plans.

Adoption is nearly universal. The share of organizations using artificial intelligence has reached 97.5%, up from 90.9% in 2024. Today's challenges revolve around the practical execution of AI rather than doubts about whether it is needed at all. The open questions now are how to overcome integration complexity, where to find skilled talent, and how to fund integration.

Usage patterns have matured. The primary use cases for artificial intelligence are now code generation, documentation generation, and code review. Growing use of AI for requirements analysis and UI/UX optimization shows that it is extending its reach beyond the code-writing stage. The technology now shapes the entire software development process, from planning and conception to delivery.

Artificial intelligence expertise is being internalized. The share of organizations building their AI capabilities in-house reached 59.5%, while reliance on external tools dropped from 30% in 2024 to 17.7% in 2025. Organizations are demonstrating long-term commitment to AI by building it into their permanent operational structures. Collaboration with educational institutions grew dramatically, from 2.5% to 20.3%, reflecting the industry's increasing need for academic-industry partnerships.

Productivity gains are now measurable. According to the 2025 survey data, 82.3% of respondents experienced at least a 20% productivity increase, while 25% achieved gains of more than 50%. Most companies were able to measure the results of AI integration, which makes its implementation easier to justify. We expect adoption to keep growing in the coming years.

At the same time, concerns have grown more nuanced. Where 2024's discourse was often reactive, centered on awareness and exploration, 2025 brings reflection. Developers and leaders alike are reckoning with second-order effects: skill atrophy, over-reliance on AI output, ethical uncertainty, and the erosion of foundational competencies among junior staff. These are not fringe concerns; they are now part of mainstream strategic thinking.

Legal and ethical standards have advanced. Full compliance with AI regulations rose to 72.2% (from 55% in 2024).

The year 2025 demonstrates how organizations have transitioned from exploratory AI deployments to permanent strategic implementation. AI is now deeply embedded within organizations, yet they have only just started to learn how to use it responsibly. The coming years will require organizations to develop better governance systems, stronger skill alignment, and ethical resilience in the face of fast-paced technological advancement.

Conclusion

The 2025 findings confirm that AI in software engineering is no longer experimental. Companies are not just adopting AI tools; they are reshaping workflows, building internal expertise, and measuring tangible productivity gains.

Yet this progress brings new responsibilities. As AI moves deeper into the development lifecycle, questions of trust, transparency, and long-term skill sustainability become critical. The industry is beginning to acknowledge that successful AI integration depends not only on performance but also on governance, ethics, and alignment with human judgment.

Looking ahead, the conversation will likely shift from "how to implement AI" to "how to lead with it." The next survey cycle will need to explore emerging questions: How do agentic systems affect accountability? How do we preserve developer intuition in an automated environment? And how do we ensure that AI enhances, rather than erodes, the creative and collaborative core of software development?

AI has passed the adoption threshold. The challenge now is to use it wisely.

Some of the companies that participated in the survey:

Solbeg, ZG Worldwide Consultants, LeanCode, Novumlogic Technologies, CodeAegis, Sigli, SynergyTop, Fora Soft, JetThoughts, Celadonsoft, HUSPI, Eagle IT Solutions, Evotek, NineGravity, ZAPTA Technologies, HighTech Kaunas Cluster, iborn.net, 2N Consulting Group, FlairsTech, launchOptions, Data InfoMetrix, DifferenzSystem, Yaali Bizappln Solutions, World Web Technology, AleaIT Solutions, Anadea, Wizard Labs, Swift Code Solution, BugRaptors, MoogleLabs, Simplico, Chudovo, Biz4Group, Erbis, Mallow Technologies, DesignNBuy, Raftlabs, WeblineIndia, Lantern Digital, Sysmo, Kyanon Digital, Easify Technologies, A-HR, UPlineSoft, Ideas2Goal Technologies, eCommerce Development Pros, The Apptitude, 2WinPower, Tech.us, Think201, ManekTech, WideFix, Spotverge, MetaSys Software, Jelvix, Intersog