How AI Is Reshaping Development Workflows in 2025

In the era of AI-driven development, Techreviewer conducts online surveys to track real-world trends in software engineering. We leverage our global network of top software development companies to assess the impact of AI on the development workflow.

Following our previous study, “AI in Software Development 2025,” we decided to delve further and analyze how AI is actually utilized by developers today and its impact on their work. The goal of this research is to understand how deeply AI has penetrated the software development process, identify the main trends shaping this transformation, and evaluate its real impact on productivity, quality, and ethics.

In this survey, we examine the AI development workflow: where AI speeds up teams, where trust and code verification are still required, and what leaders should prioritize to capture value while managing risk.

Executive Summary

- AI is now routine in software development: 64% use it daily, and 20% use it weekly. ChatGPT dominates adoption at 84%, with Claude, Copilot, and Cursor each above 50%.

- Developers mainly use AI for debugging, code generation, documentation, and tests – areas with the biggest productivity gains. Highly creative or context-driven tasks, such as system design or API integration, remain mostly human-led.

- Overall satisfaction with AI tools is high. Nearly 78% of developers are satisfied, and 85% report higher productivity. Two-thirds notice better code quality, showing that AI is not only speeding up development but also improving results.

- Still, trust in AI remains cautious. Most developers verify AI-generated code manually before implementation. Only 18% are fully confident in AI accuracy, and almost all teams treat AI output as a draft rather than final code.

- Ethical and legal questions are widespread. Four out of five developers have faced at least one ethical dilemma related to AI-assisted coding. The main concerns are intellectual property, data privacy, and bias in AI-generated results. Despite broad use, governance and disclosure practices lag behind.

- In short, AI is now a permanent part of the development process. It brings measurable efficiency gains but still requires human oversight, clear policies, and responsible adoption to unlock its full potential.

Methodology

This research was conducted via online surveys targeting software developers and decision-makers across the global software development industry. Respondents represented a mix of company sizes, including small teams with fewer than 50 employees, mid-sized companies and large enterprises. The survey reached professionals in diverse roles, with 55.5% holding leadership positions (CTOs, Tech Leads, CEOs, Project Managers), 24.3% working as hands-on engineers, and 20.2% from non-technical functions. Participants spanned 19 countries, with India (21.2%) and the United States (20.3%) representing the largest shares. The majority of respondents (64.5%) were senior-level specialists with over 8 years of experience. Survey findings were cross-validated against industry studies from Stack Overflow, McKinsey, and GitHub/Accenture to ensure alignment with broader trends in AI-assisted development.

1. Respondent Profile

Company Size

Respondents mostly represent small and mid-sized companies:

- 56.8% of responses came from mid-sized companies with 50-249 employees.

- 38.6% were small teams with fewer than 50 people.

- 4.5% of respondents represent large companies with 250 or more employees.

This sample reflects lean, growing tech teams. Still, the patterns appear to generalize to enterprises: cross-checks with recent Stack Overflow and McKinsey studies show a similar trendline.

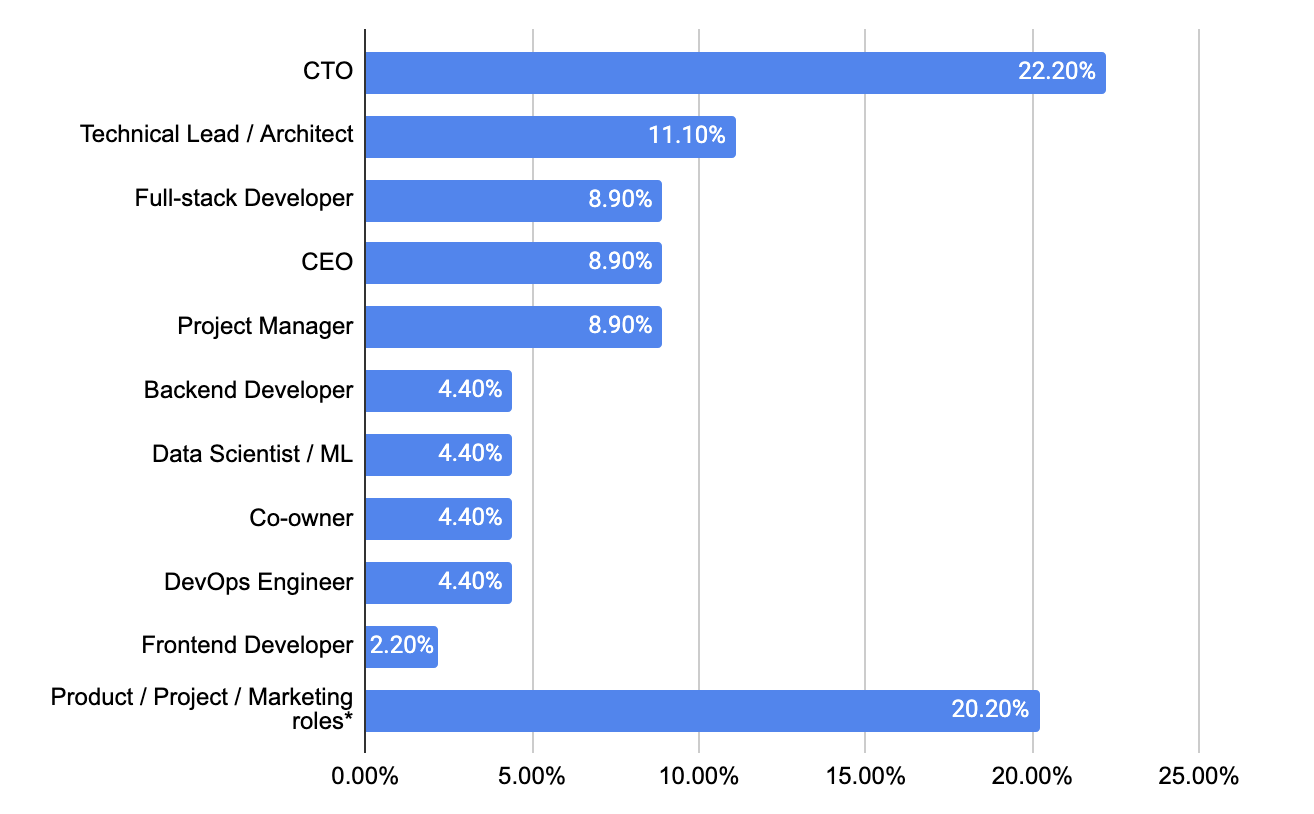

Respondent’s Role Within The Company

The survey reached a broad audience; however, the majority of respondents hold either senior-level positions or are decision-makers.

- CTO (22.2%) – the single most common role.

- Leadership and decision makers dominate with 55.5%. This group encompasses CTOs (22.2%), Tech Leads/Architects (11.1%), CEOs (8.9%), co-owners (4.4%), and Project Managers (8.9%).

- Hands-on engineers are another notable group with 24.3%. They include full-stack developers (8.9%), backend developers (4.4%), frontend developers (2.2%), Data Scientists and Machine Learning engineers (4.4%).

- 20.2% came from a non-technical group, which includes Product Manager, Marketing, SEO, Digital Marketing Analyst, various director roles, HR, and DM Manager.

Most responses came from experienced tech leads and executives – the people who decide whether to adopt AI tools.

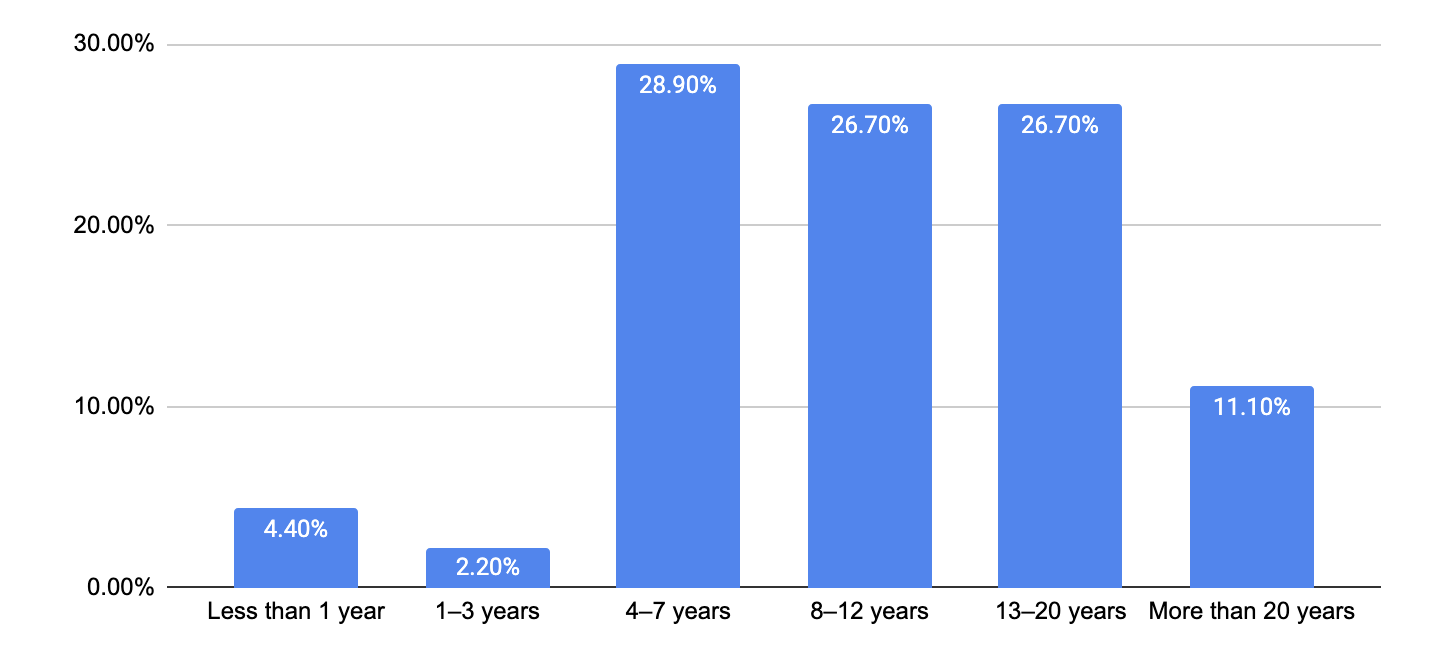

Years of Development Experience

The survey represents experienced professionals:

- 37.8% of respondents have between 13 and 20 years of experience.

- 55% of respondents have between 4 and 12 years of experience.

- Only a small fraction (6%) of respondents have less than three years of experience in their careers.

Overall, 64.5% of respondents are senior-level specialists with over 8 years of experience and deep industry expertise.

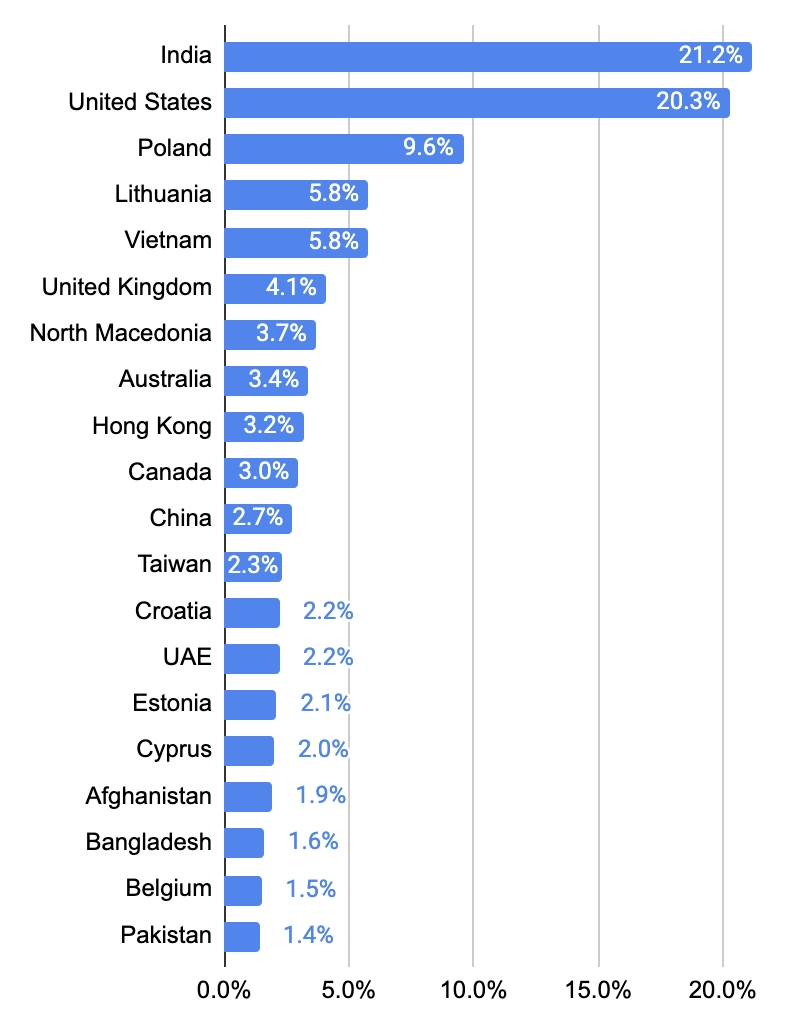

Office Location

The survey reached specialists across 19 countries, with clear regional leaders:

- India (21.2%) and the United States (20.3%) dominate, together accounting for over 40% of respondents.

- Other notable countries include Poland (9.6%), Lithuania (5.8%), and Vietnam (5.8%).

- 4.1% of respondents operate in the United Kingdom.

- The remaining Asia-Pacific and European countries each contribute small shares, together accounting for 33.2% of respondents.

Overall, the results show that AI-driven development practices are spreading globally, with the USA and India leading the way.

2. Tool Adoption & Usage Patterns

Key Takeaways:

- The AI tool market is highly concentrated, with ChatGPT dominating at 84% of respondents using it; Claude/Copilot/Cursor follow, each with usage rates exceeding 50%.

- AI is a day-to-day tool: 64% use AI daily, 20% use it weekly, and only 2% never use it.

- The primary use case is debugging and code generation. Around 60% of respondents use AI tools for these purposes.

- Support work is heavily automated: over 50% of respondents use AI for document generation and writing tests.

- Over 50% of respondents use AI to learn new technologies and frameworks.

- AI saves little time in refactoring, DB queries, architecture planning, and API integration.

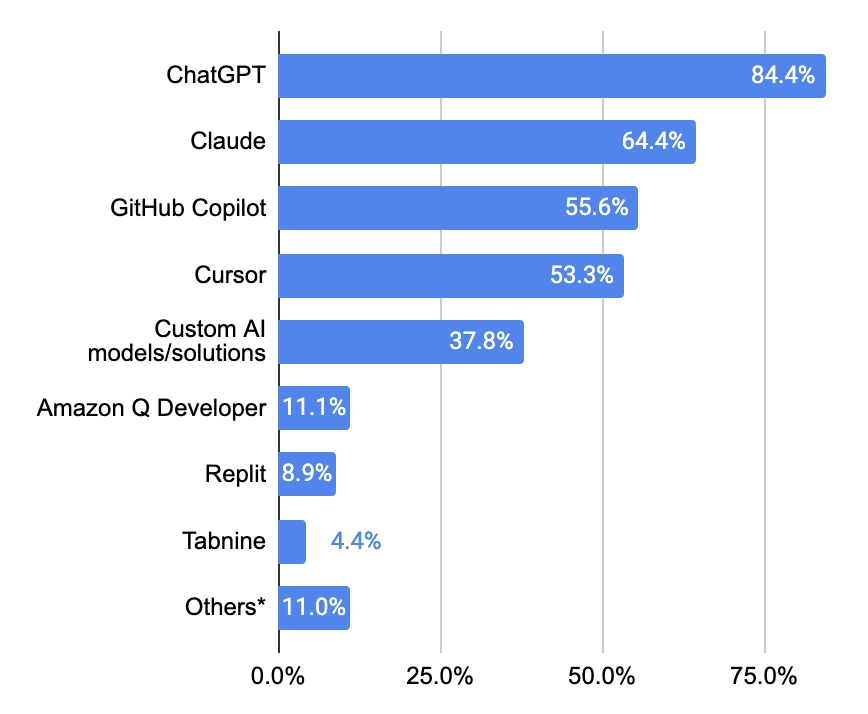

AI-Powered Development Tools That Are in Use

- ChatGPT is the de facto standard: 84.4% of respondents use it.

- A solid second tier: Claude 64.4%, Copilot 55.6%, Cursor 53.3%.

- 37.8% of respondents run custom models.

- Niche tools have the lowest adoption rates: Amazon Q, 11.1%; Replit, 8.9%; Tabnine, 4.4%.

ChatGPT clearly dominates in AI-assisted development. However, the market has other strong players as well: Claude, Copilot, and Cursor represent a strong second tier, each used by more than half of developers.

More than one in three respondents rely on custom AI models, indicating that internal experimentation with fine-tuned or proprietary systems is already widespread. Niche tools, such as Tabnine, Replit, and Amazon Q Developer, remain marginal, with adoption rates of about 11% or lower.

Overall, the use of AI for workflow automation is concentrated around a few major LLM ecosystems and IDE-integrated assistants.

Alignment With Other Research

Our findings on the use of AI for workflow automation align closely with broader industry studies. The Stack Overflow 2025 survey also identified ChatGPT (82%) and Copilot (68%) as the leading AI tools among developers, confirming their mainstream status.

Reports from McKinsey and GitHub/Accenture show similar adoption levels, with enterprises increasingly building custom AI systems for internal use. The rise of tools like Claude in this dataset mirrors a wider trend toward multi-model workflows, where developers switch between reasoning-focused and code-integrated AI models.

The Frequency of Usage of AI Assistance in Development Work

- The majority, 64.5% of developers in the survey, rely on AI tools every day.

- 20% engage with AI at least weekly.

- 13.3% refer to AI tools at least a few times a month.

- Only a small minority of 2.2% of developers never use AI tools.

AI is now an everyday tool for developers; non-use is rare. AI has become a part of the development workflow.

Alignment With Other Research

This mirrors global trends: the 2025 Stack Overflow survey found that around 60% of developers use AI tools weekly or daily, and 75% plan to maintain or increase usage. Reports from GitHub and McKinsey show similar momentum, with many organizations embedding AI directly into their development environments.

Development Tasks Where Respondents Most Often Use AI

- Hands-on coding activities lead: debugging (62.2%) and code completion (57.8%) are the top uses. AI has become a near-real-time pair programmer, while AI-generated code has become the norm.

- Support work is heavily automated: document generation and writing tests account for 53.3% each, meaning AI is actively changing the way people handle routine and repetitive tasks.

- Learning new technologies holds third place: over half (55.6%) use AI to learn new tech and frameworks.

- The quality layer is partly automated: AI code review assistance is applied by 40.0% of respondents, while code refactoring accounts for 33.3% of use cases.

- The communication layer isn’t seriously affected: AI is used for writing database queries and API integration in only 26.7% and 24.4%, respectively.

- System design remains human-led: architecture planning is lowest (17.8%), signaling limited trust in AI capability to make effective decisions.

AI is widely applied to coding-related activities and routine support tasks, such as document generation and test writing, and has become a de facto pair programmer. Highly context-related tasks, such as cross-system communication and architecture, remain human-led.

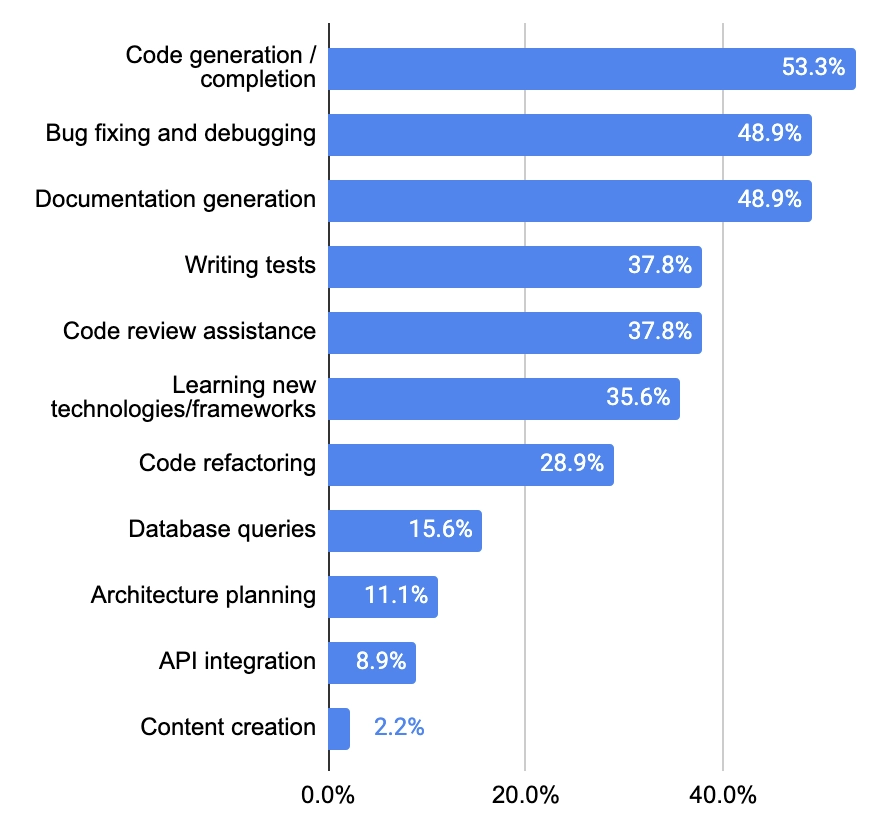

Where AI Saves The Most Time

Here, we asked respondents where they see the greatest time savings from AI. The areas where respondents actually feel faster:

Top Time-Savers

- Code gen/completion (53.3%) and debugging (48.9%) – fast pair-programmer for everyday tasks.

- Documentation (48.9%) and tests (37.8%) – routine automation zones.

- Learning new tech (35.6%) – on-demand knowledge to shorten ramp-up.

Usage Efficiency Patterns

- There are two clear usage patterns – repeatable combinations of tasks that people delegate to AI.

- Development usage pattern: code generation paired with debugging and doc writing (often chosen together).

- Quality usage pattern: tests paired with code review to tighten feedback loops (often chosen together).

Where AI Saves Less Time

- Refactoring (28.9%) – needs human intent/context.

- DB queries (15.6%), architecture (11.1%), API integration (8.9%) – constrained by system knowledge and design trade-offs.

Cross-Question Analytics – “Use vs. Time Saved”

This chart shows a relative efficiency score for AI by task: time saved ÷ how often AI is used. The higher the value, the tighter the link between use and benefit.

Values near 1.0 indicate that almost everyone who uses AI for that task reports time saved; lower values indicate that it’s used often but delivers fewer perceived savings per use.
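The score can be reproduced from the percentages reported in the usage and time-saved sections. The following is a minimal Python sketch, not the exact procedure used for the chart: the dictionary and function names are illustrative, and because it works from rounded percentages, individual values may differ from the charted ones in the second decimal place.

```python
# Share of respondents (%) who use AI for each task (usage question).
usage = {
    "code generation/completion": 57.8,
    "debugging": 62.2,
    "documentation": 53.3,
    "writing tests": 53.3,
    "learning new tech": 55.6,
    "refactoring": 33.3,
    "DB queries": 26.7,
    "architecture planning": 17.8,
    "API integration": 24.4,
}

# Share of respondents (%) who report time saved on each task.
time_saved = {
    "code generation/completion": 53.3,
    "debugging": 48.9,
    "documentation": 48.9,
    "writing tests": 37.8,
    "learning new tech": 35.6,
    "refactoring": 28.9,
    "DB queries": 15.6,
    "architecture planning": 11.1,
    "API integration": 8.9,
}


def efficiency_scores(usage, time_saved):
    """Relative efficiency per task: time-saved share divided by usage share."""
    return {task: time_saved[task] / usage[task] for task in usage}


scores = efficiency_scores(usage, time_saved)

# Print tasks from most to least efficient.
for task, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{task:<28} {score:.2f}")
```

Because the score is a ratio of two aggregate shares, it describes the respondent group as a whole rather than any individual's experience.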

Effectiveness Analysis:

- Most efficient uses (≥0.9 efficiency): code generation/completion, and documentation writing. These are the core ROI engines of the AI development workflow.

- Good efficiency (>0.7 efficiency): bug fixing and debugging, writing tests, and code refactoring.

- Moderate efficiency (>0.6 efficiency): learning new tech (0.64) and architecture planning (0.62).

- Low-value use cases (<0.6 efficiency): API integration (efficiency 0.37) and DB queries (0.58) save little time relative to their frequency of use.

Complementary Conclusions:

- Bug fixing is popular but leaky: high usage (62.2%) with middling efficiency (0.79).

- AI usage for refactoring is undervalued: it has low adoption (33.3%), but shows good efficiency (0.87).

- AI usage for learning is overvalued: used by 55.6% with moderate efficiency (0.64).

- DB queries & API integration – low adoption, low efficiency: used by 26.7% / 24.4%, efficiency 0.58 / 0.37.

- Emerging niche: content creation appears only in “time saved” (2.2%). Early experiments, not a mainstream dev task.

AI delivers the best ROI in everyday coding and docs; integration, DB work, and architecture lag. Refactoring looks underrated, while learning is popular but only moderately effective.

3. Perceived Value & Satisfaction

Key Takeaways:

- Respondents are primarily satisfied with AI: 77.8% satisfied with AI tools; dissatisfaction stays under 9%.

- Productivity rises: 84.5% report gains from implementing AI; 0% saw a significant drop.

- AI improves code quality: 64.4% report an improvement in code quality, compared to 8.9% who experience a worsening.

- Confidence in AI-generated code is cautious: 64.5% confident, only 17.8% very confident; 20.0% neutral.

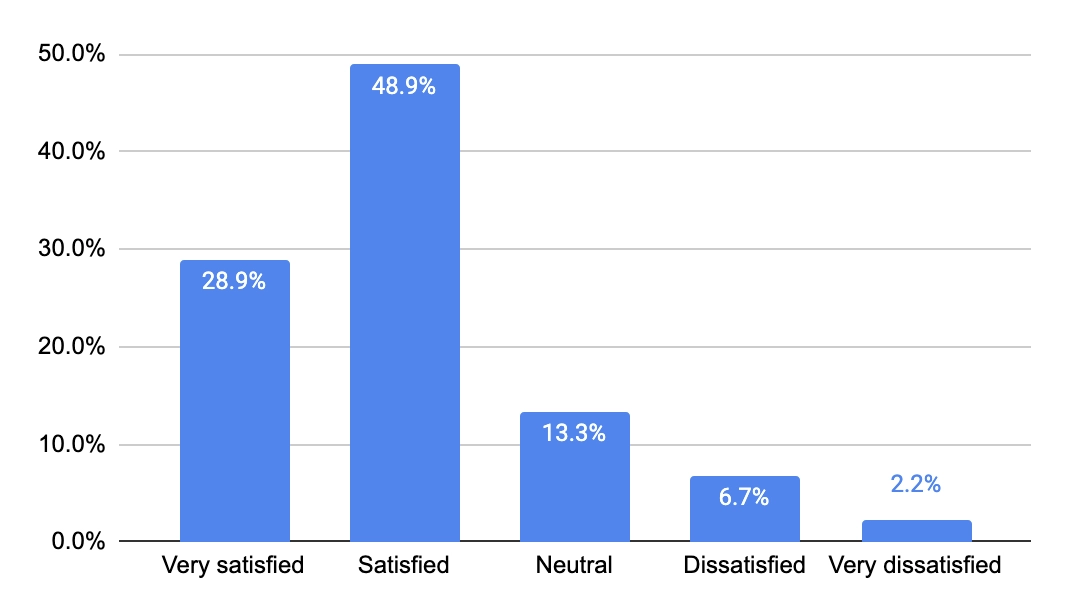

Satisfaction Rate With Current AI Development Tools

- Satisfied respondents comprise 77.8% (very satisfied 28.9% + satisfied 48.9%): a clear majority.

- Dissatisfied respondents are in the minority: 6.7% are dissatisfied and 2.2% are very dissatisfied.

- The satisfaction-to-dissatisfaction ratio is approximately 9:1: among respondents who expressed a clear view, roughly 9 out of 10 are satisfied with AI tools.

- 13.3% have a neutral attitude: a sizable undecided group, likely reflecting mixed results or limited exposure to AI tools.

Sentiment is clearly positive: satisfaction with AI-led automation dominates, showing a mature stage of adoption.

Developers now build pipelines that use AI for data integration and code generation.

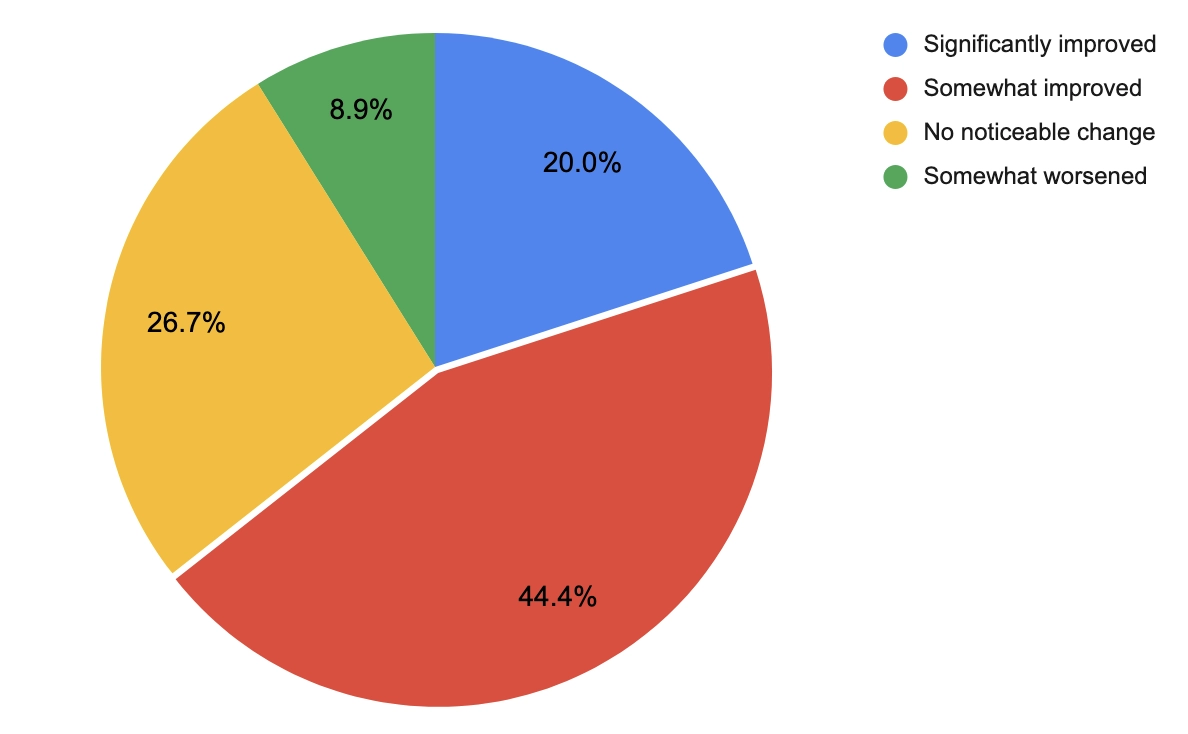

How Has AI Integration Changed Overall Development Productivity

- Clear productivity boost: 84.5% of developers report either some productivity gain (48.9%) or a significant gain (35.6%) from implementing AI for workflow automation.

- No noticeable change: 11.1% of adopters report no noticeable change in productivity, implying that they managed to implement AI tools, but didn’t get the expected benefits from them.

- Minimal negative outcomes: 4.4% report decreased productivity, suggesting that integration overhead or trust issues are minor concerns.

- Notably, 0% of respondents report that AI significantly decreased development productivity.

Over 80% of respondents report a positive impact on the coding process after implementing AI for workflow automation. This suggests that AI can deliver consistent benefits to businesses.

Change In Code Quality With AI

- 64.4% say code quality improved (20.0% significantly, 44.4% somewhat).

- 26.7% see no change.

- 8.9% say quality worsened (none significantly).

Most teams report better code quality, while harm is rare, and a quarter see no change. This suggests AI is not just speeding delivery – it’s helping catch bugs earlier, standardize code, and enforce best practices across teams.

Confidence in AI-Generated Code

- Confidence in AI-generated code accuracy is 64.5%: “very confident” with 17.8% + “somewhat confident” with 46.7%.

- 15.5% of respondents express doubts in AI accuracy and reliability: 13.3% are somewhat unconfident, and 2.2% are not confident at all.

- The ratio of confident to unconfident respondents is approximately 4.2:1, indicating that trust clearly outweighs doubt.

- 20.0% stay neutral – likely reflecting mixed experiences or limited exposure.

Trust in AI accuracy and reliability clearly outweighs doubt, but strong trust is limited, with only 17.8% feeling “very confident.” Hard skepticism is rare (2.2% showed no confidence).
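The confidence figures in this section come down to simple arithmetic on the five answer options. The following is a minimal Python sketch using the shares reported here; the helper name `summarize_likert` and the way the options are grouped are illustrative assumptions, not part of the survey tooling.

```python
def summarize_likert(shares, positive, negative):
    """Collapse answer shares (%) into positive/negative totals and their ratio."""
    pos = sum(value for option, value in shares.items() if option in positive)
    neg = sum(value for option, value in shares.items() if option in negative)
    return {
        "positive": round(pos, 1),
        "negative": round(neg, 1),
        "ratio": round(pos / neg, 1),
    }


# Shares of respondents (%) per answer option, as reported in this section.
confidence = {
    "very confident": 17.8,
    "somewhat confident": 46.7,
    "neutral": 20.0,
    "somewhat unconfident": 13.3,
    "not confident at all": 2.2,
}

summary = summarize_likert(
    confidence,
    positive={"very confident", "somewhat confident"},
    negative={"somewhat unconfident", "not confident at all"},
)
print(summary)
```

The same helper applies to the satisfaction question by swapping in its answer options and shares.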

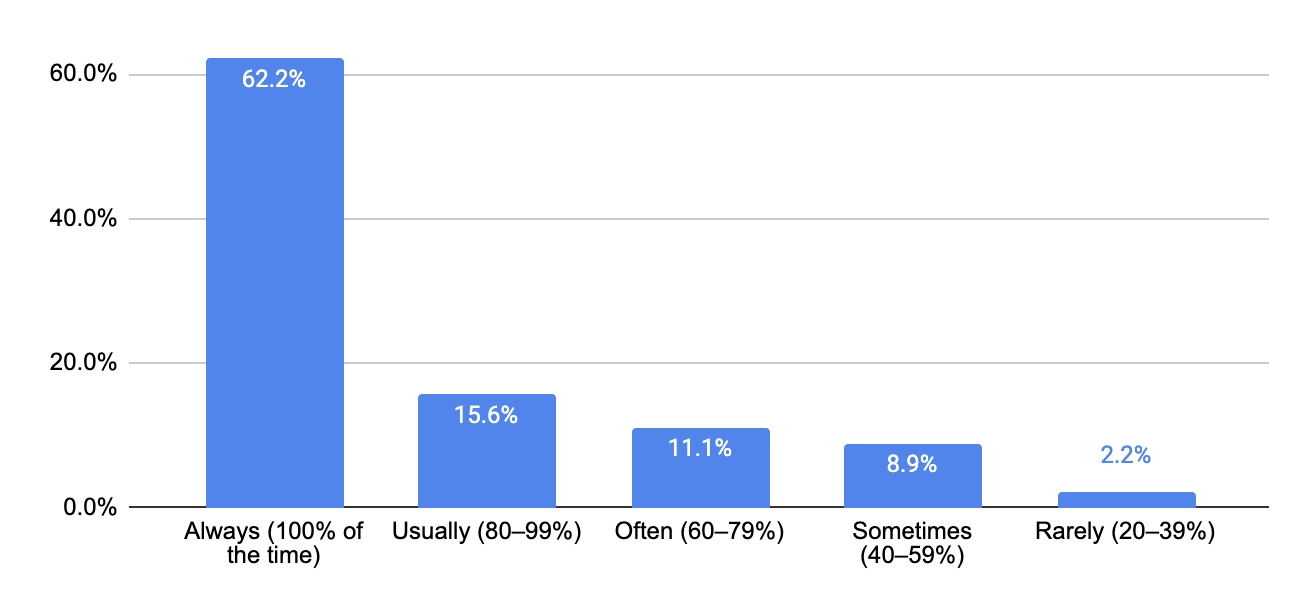

4. Code Verification & Errors

Key Takeaways:

- AI-generated code verification is the norm: 62.2% always check AI code, and 15.6% usually do – ≈78% practice near-constant validation.

- The most used verification practice is manual review (77.8%).

- Top error: logic errors (62.2% mentioned it) – code runs, but does the wrong thing.

- Security risk is real: 46.7% of developers encounter issues in AI-generated code that can expose security holes.

- Project fit of AI code is a problem: 44.4% see incompatibility of AI code with the existing codebase.

- Verifying AI code often takes longer than writing code from scratch: 35.6% finish AI-code verification faster, 2.2% see no difference, and about 62% spend more time.

- Bugs in AI code are expected: 97.8% have hit issues at least once; 22.2% do so often/very often – verification remains a built-in step.

Verification Frequency of AI-Generated Code

- AI-generated code verification is the norm: 77.8% check code at least 80% of the time.

- The majority always verify AI code: 62.2% of respondents verify AI code in 100% of cases.

- Low-frequency verification is rare: only 11.1% verify less than 60% of the time (sometimes or rarely), including 2.2% who rarely verify.

AI code is treated as a draft – code verification is a built-in step, not optional. Teams don’t trust AI output by default and build review into every workflow.

Methods Used to Verify AI-Generated Code

We discovered that code verification is the standard. The next question is how teams actually verify the AI-generated code.

- Human-first: manual code review leads (77.8%). Peer reviews are much lower (18.8%), suggesting reviews are often solo, not shared.

- Teams run tests first; static analysis and security checks come later. Automated testing is the most common automated approach, used by 48.9%, while static analysis (17.5%) and performance benchmarking (16.5%) lag behind.

- Documentation is not a popular code verification tool: only 26.7% of respondents check the code against existing docs.

- Surprisingly, 22.2% of respondents use cross-referencing with multiple AI tools as a verification method.

- Security is underused: security scans (21.0%) and runtime monitoring/logging (19.0%) are not the default, despite frequent AI-related issues.

The result is an inverted pyramid of trust: human review forms the broad top, tests sit in the middle, and specialized gates (security/perf/static/runtime) form a narrow base. AI development workflows still need human participation to produce good results.

Common Errors Found in AI-Generated Code

- Top problem: logic errors (62.2%) – code runs but does the wrong thing.

- Security risks (46.7%) – unsafe patterns show up often.

- Compatibility with the existing codebase (44.4%) – doesn’t fit project conventions or versions; breaks types/imports, ignores module boundaries.

- Incorrect API usage (33.3%) – wrong endpoints/params/auth, ignores rate limits and error codes, mishandles pagination/retries, or uses outdated SDK calls.

- Poor code structure (33.3%) – long, tangled functions, duplicated code, weak separation of concerns, unclear naming; hard to test and maintain.

- Syntax is not the main issue (31.1%) – compile failures occur less frequently than behavior failures.

- Reliability gaps (28.9%) – performance and missing error handling.

- Outdated practice usage (20.0%) – deprecated methods creep in.

- Truly rare: “can’t answer” (2.2%), unnecessary code (2.2%).

Most AI code problems are behavioral and contextual, such as logic errors, security risks, and a poor fit with the existing codebase, rather than simple syntax mistakes. Teams should lean on stronger tests, API contracts, and security/static checks to catch what linters miss.

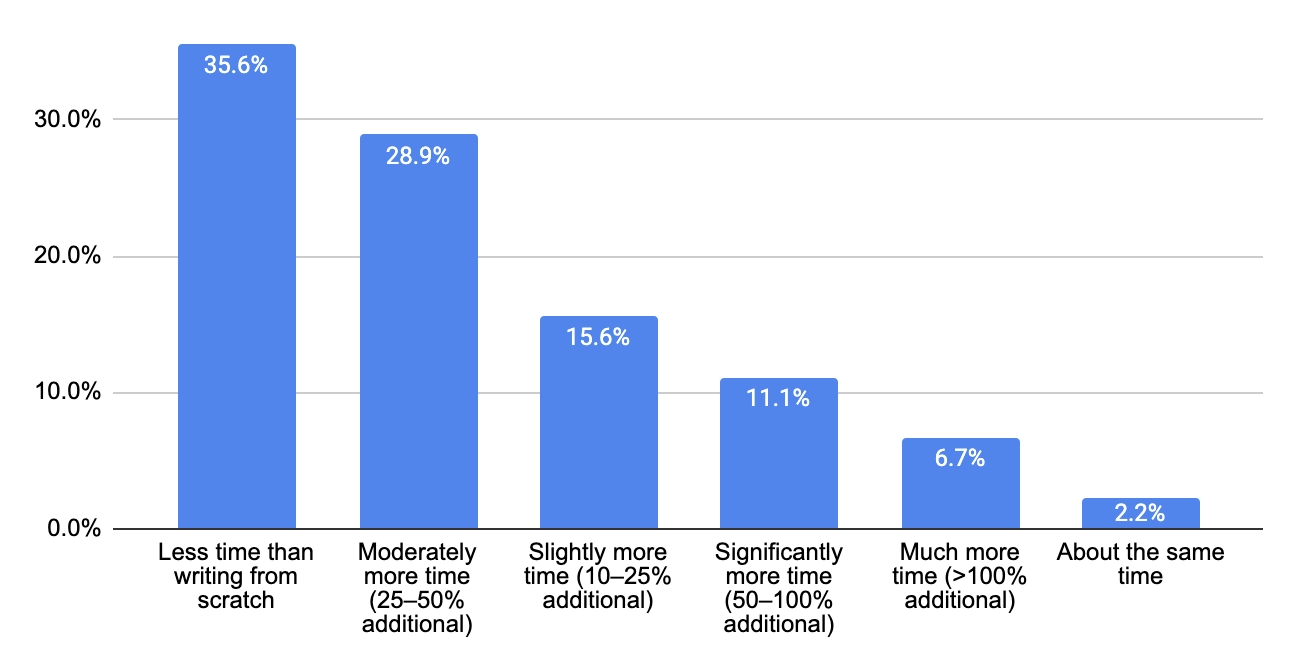

Additional Time Spent on Verifying AI-Generated Code

Another major question is the time and effort required to verify the AI-generated code. Does it take a long time to verify AI code? Is it easier to verify the human-written code than the AI-generated code? We asked respondents if implementing AI had changed the time spent on code verification.

- 35.6% – code verification is faster than writing from scratch. Likely cleaner, more readable AI code with fewer fixes and good project fit enables quicker code review.

- 15.6% – slightly longer (+10-25%). Some edits are needed, but no major rework is required.

- 28.9% – noticeably longer (+25-50%). Frequent logic fixes; AI gets the gist, misses details.

- 11.1% – much longer (+50-100%). Many code mismatches; deep rework required.

- 6.7% – far longer (>100%). AI output is more of a sketch; real code is rewritten.

- 2.2% – about the same time. Neutral effect: neither faster nor slower.

About a third (35.6%) of respondents verify AI code more quickly than writing it from scratch. The remaining 64.4% need as much time or more: 2.2% see no difference, and about 62% need more time for the AI code check. Roughly a fifth (~18%) face heavy overruns (+50-100% or more), making verification the bottleneck.
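The grouping behind these totals can be checked directly from the answer shares listed above. The following is a minimal Python sketch; the variable names are illustrative, and the reported shares sum to slightly more than 100% because each one is rounded.

```python
# Answer shares (%) for time spent verifying AI code vs. writing from scratch.
buckets = {
    "faster": 35.6,
    "about the same": 2.2,
    "slightly longer (+10-25%)": 15.6,
    "noticeably longer (+25-50%)": 28.9,
    "much longer (+50-100%)": 11.1,
    "far longer (>100%)": 6.7,
}

# Every bucket labelled "longer" counts as extra verification time.
longer = sum(share for label, share in buckets.items() if "longer" in label)

# Heavy overruns: verification takes at least 50% longer.
heavy = buckets["much longer (+50-100%)"] + buckets["far longer (>100%)"]

same_or_longer = longer + buckets["about the same"]
total = sum(buckets.values())

print(f"longer: {longer:.1f}%")
print(f"same or longer: {same_or_longer:.1f}%")
print(f"heavy overruns: {heavy:.1f}%")
print(f"sum of all shares: {total:.1f}%")
```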

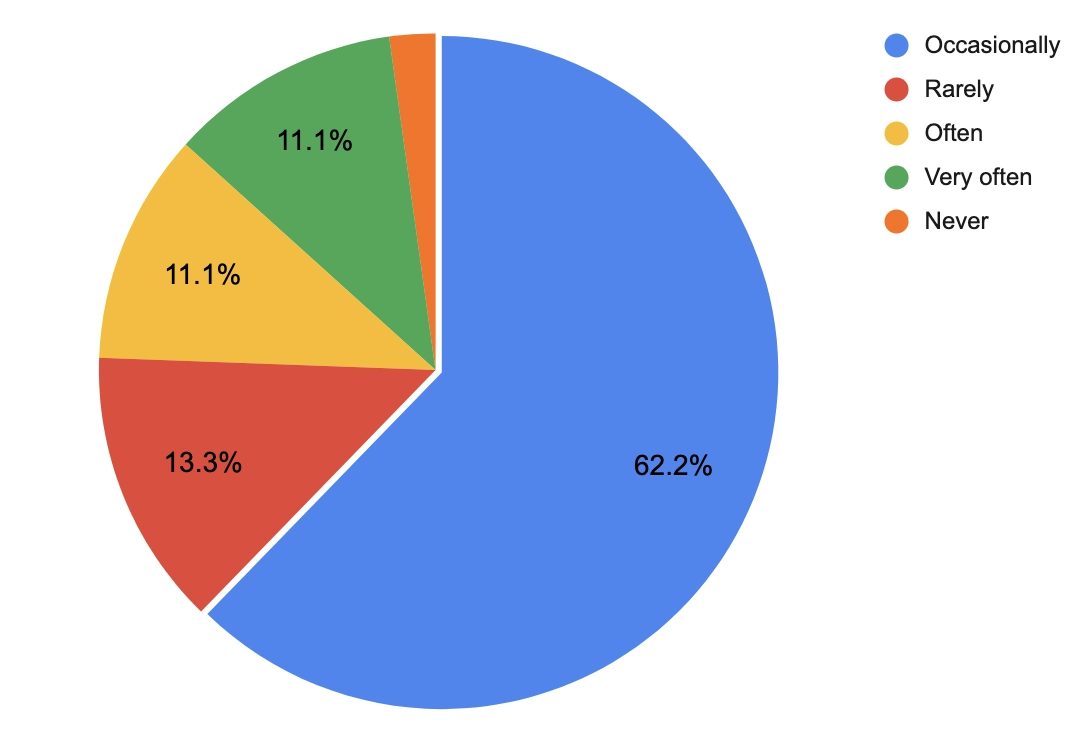

Frequency of Errors or Vulnerabilities in AI-Generated Code

- Bugs are expected: only 2.2% of respondents have never faced bugs and vulnerabilities.

- 62.2% of users occasionally encounter bugs and vulnerabilities.

- 22.2% deal with bugs frequently (often and very often, each with 11.1%), so one in five works under a repeated correction load.

- 13.3% meet problems rarely – a smaller, lower-friction cohort.

Zero-defect output is unrealistic. AI code errors are routine – usually occasional, but frequent for about one in five respondents.

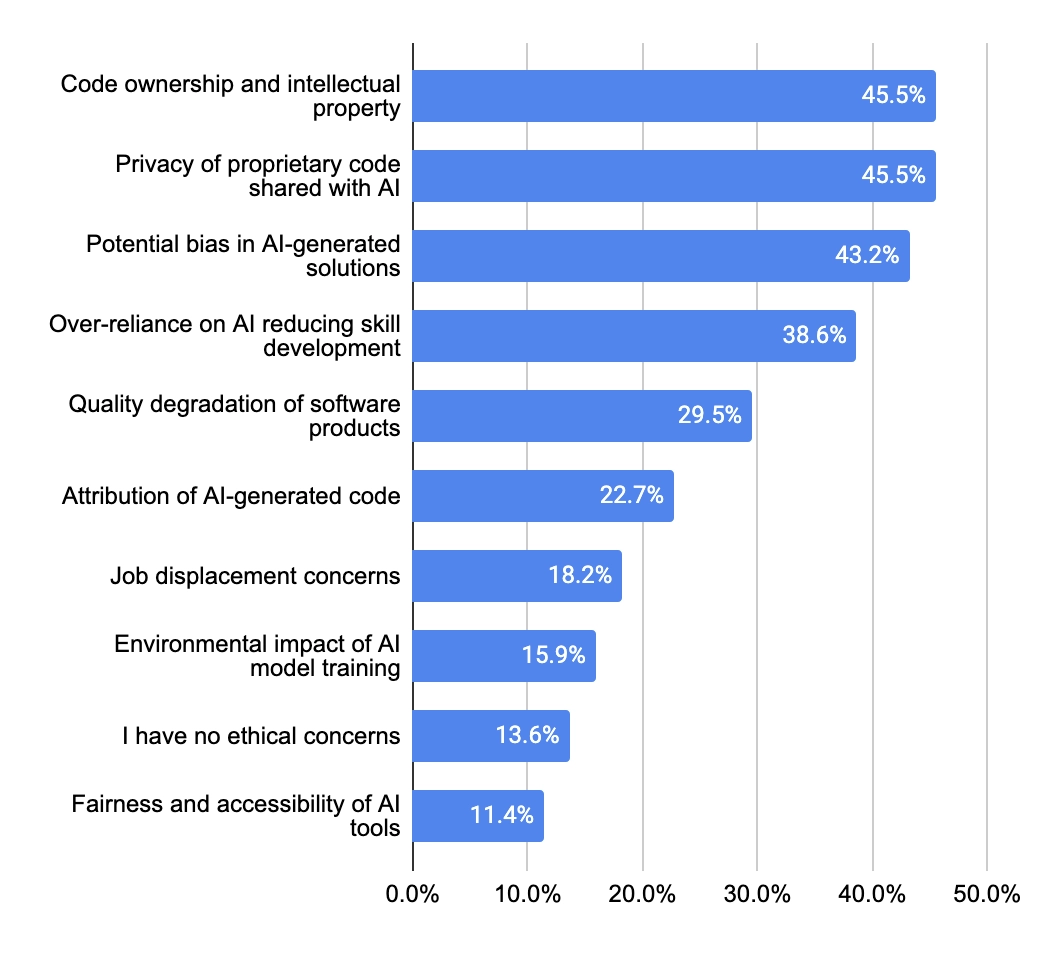

5. Ethical & Legal Concerns

Key Takeaways:

- Ethical questions are widespread: 4 out of 5 developers have faced at least one ethical dilemma when using AI, indicating that ethics is now a regular part of software development.

- Main concerns are legal and data-related: IP rights and code privacy (both 45.5%) dominate concerns, showing that teams fear legal exposure more than abstract ethics.

- AI bias is the next major worry (43.2%), reflecting growing attention to fairness and trust in AI outputs.

- Skill erosion and product quality risks follow: 38.6% of respondents fear skill erosion among developers, and 29.5% expect potential drops in product quality.

- Few care about the authorship issue or job loss: only 22.7% raise authorship issues; 18.2% mention job displacement. These topics stay in the background.

- Environmental and fairness concerns remain niche (15.9% and 11.4%), showing that developers focus more on daily operational ethics than global impact.

- Disclosure of AI use lacks consistency: 40% disclose occasionally, 38% rarely, and only 2% do it frequently.

- One in five companies never disclose their use of AI, exposing a potential governance blind spot where company or client policies are unclear.

- Concern about copyrighted training data is very high (84.5%), and nearly half (46.7%) are very concerned – developers simply don’t trust current vendor assurances.

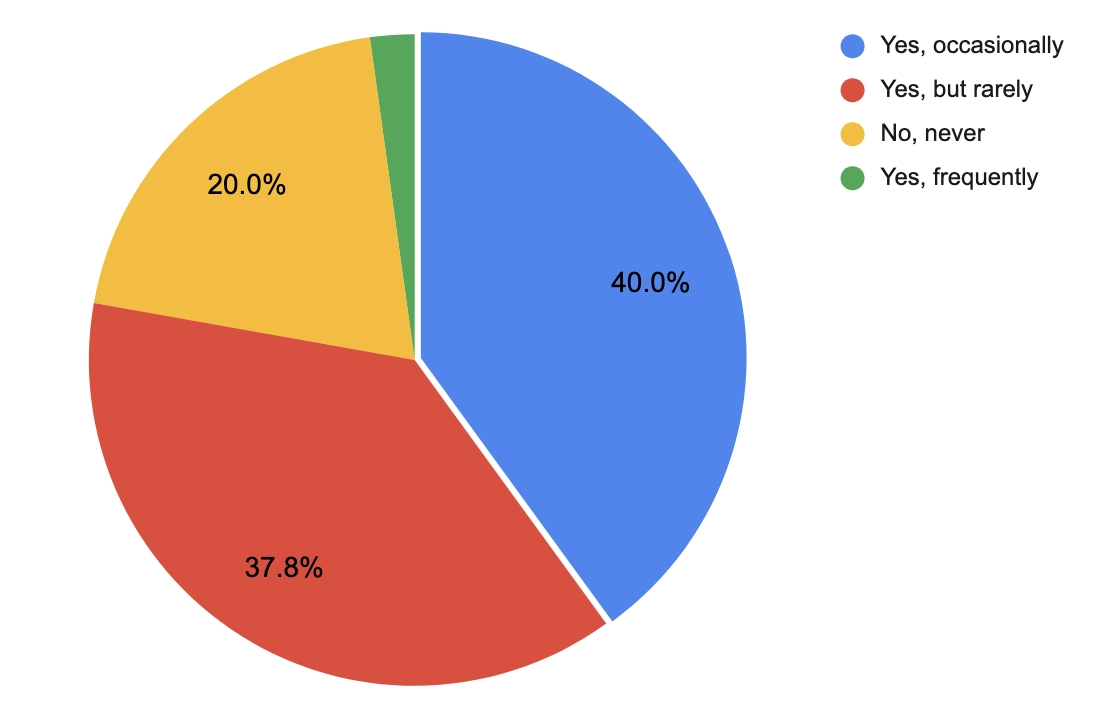

Ethical Dilemmas in Using AI for Coding Assistance

- 80.0% have faced dilemmas at least once (occasionally + rarely + frequently), making ethics a routine consideration rather than an edge case.

- Ethical incidents in using AI for coding are mostly episodic: 40.0% of respondents reported “occasionally” facing ethical dilemmas, 37.8% “rarely.”

- Only 2.2% report frequent issues – a small but high-risk segment that likely needs tighter policies.

- 20.0% never encounter dilemmas, suggesting either constrained AI usage or well-guarded workflows.

Most teams encounter ethical dilemmas with AI at least occasionally (~80%), but frequent issues are rare (2.2%). It doesn’t appear that AI integration will be banned or halted due to ethical concerns. This likely points to routine, low-intensity friction that’s best handled with clear, lightweight guidelines (e.g., disclosure and source/data rules), not bans.

Key Ethical Concerns About AI-Assisted Coding

The next question that logically follows is what these ethical concerns are about. Ethical concerns refer to doubts about whether AI usage infringes on someone's rights or harms individuals, employees, or businesses. Here is what we found:

- Legal and privacy risks lead (both 45.5%) – these are two of the most acute questions: privacy of proprietary code shared with AI and the intellectual property issue.

- Potential bias in AI-generated solutions takes the close third place with 43.2%.

- Over-reliance on AI in development bothers 38.6% of respondents. Over-reliance may lead to serious skill erosion.

- 29.5% of respondents worry about product quality degradation over time.

- Attribution of AI-generated code is secondary (22.7%) – attribution here refers to responsibility for AI-generated code, and it is not a primary concern for most respondents.

- Job displacement (18.2%) and negative environmental impact (15.9%) are second-tier concerns.

- 13.6% report no concerns – a clear minority.

- 11.4% worry about the fairness and accessibility of AI tools.

Teams worry first about getting sued or leaking code (IP & privacy at the top), with bias close behind.

Disclosure of AI Tool Usage to Clients or Employers

This question is about transparency in using AI. Do developers disclose their use of AI to clients and employers?

- Disclosure is selective, not routine: 40.0% of respondents disclose occasionally; 37.8% disclose rarely.

- Full transparency is uncommon: only 2.2% disclose frequently, so disclosure by default is rare.

- One in five never disclose: 20.0% report no disclosure at all.

- 4:1 ratio of disclosure vs. non-disclosure (80.0 : 20.0) – practice exists, governance lags.

Most teams treat disclosure as situational – likely based on client sensitivity, code criticality, or contract terms.

Concern Over AI Tools Trained on Copyrighted Code

In the research, we examined one specific concern: the concern over AI tools trained on copyrighted code. Here are the results:

- Concern is widespread: 84.5% express some level of concern (very/somewhat/slightly).

- 46.7% of respondents reported being very concerned about training on copyrighted code.

- Neutral attitude is reported by 13.3% of respondents.

- Low concern is rare: 6.7% are only slightly concerned (already counted in the 84.5% above), and just 2.2% are not concerned at all.

Most developers are genuinely worried about copyrighted training data: concern is the norm, not the exception.

Despite AI vendors' assurances, trust remains low because training datasets are opaque, proofs are difficult to verify, licensing rules are complex, and lawsuits keep the legal risk visible, so developers assume that “training might still take place on my data.”

Conclusion

Let’s draw the big picture of the AI impact on the development workflow:

- Developers no longer test AI tools – they depend on them. Over 80% use AI at least weekly (64.5% daily), 85% report productivity gains, and nearly two-thirds experience improved code quality. AI is now embedded in the development workflow, automating documentation, debugging, testing, and knowledge discovery.

- Yet the trust is cautious. More than three-quarters always or usually verify AI code, mostly through manual review, and 64% report that verification takes as long or longer than writing code from scratch. AI works best as a fast, tireless assistant – not an autonomous coder.

- Ethical and legal risks have become mainstream. Four out of five developers face dilemmas around IP, privacy, and bias. Most disclose AI use inconsistently, and 85% worry about copyrighted training data. The governance gap is evident: adoption outpaces policy.

So what can software development companies do with this data today to get the maximum benefits from the AI implementation?

Practical Recommendations From Techreviewer

1. Software development firms should first formalize their AI governance.

Clear internal rules must define which tools are approved, how data is handled, and when disclosure to clients is required. Transparency should become the norm.

Why? This ensures compliance, protects intellectual property, and builds client trust in AI-enabled processes.

2. Next, integrate verification directly into the workflow.

AI-generated code should always be treated as a draft that goes through human review, automated tests, and static checks. Continuous integration systems should automatically validate AI output to reduce manual overhead.

Why? This approach maintains quality and safety as AI becomes a standard part of development.
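One way to picture this recommendation is a small gate runner that a CI job calls before a change can merge. The following is a minimal Python sketch under stated assumptions: the tool choices (`pytest`, `ruff`, `bandit`) and the names `run_gates`, `all_passed`, and `DEFAULT_GATES` are illustrative and do not come from the survey; any test runner, static analyzer, or security scanner that signals failure through a non-zero exit code would fit.

```python
import subprocess
import sys
from dataclasses import dataclass


@dataclass
class GateResult:
    name: str
    passed: bool


def run_gates(gates):
    """Run each gate command in order; a gate passes when its exit code is 0."""
    results = []
    for name, command in gates.items():
        try:
            completed = subprocess.run(command, capture_output=True, text=True)
            passed = completed.returncode == 0
        except FileNotFoundError:
            # A missing tool counts as a failed gate rather than a silent skip.
            passed = False
        results.append(GateResult(name, passed))
    return results


def all_passed(results):
    """True only when every gate passed."""
    return all(result.passed for result in results)


# Hypothetical gate set; the tool choice is an assumption, not a survey finding.
DEFAULT_GATES = {
    "tests": [sys.executable, "-m", "pytest", "-q"],
    "static analysis": ["ruff", "check", "."],
    "security scan": ["bandit", "-r", "."],
}
```

A CI step could call `run_gates(DEFAULT_GATES)` and exit with a non-zero status when `all_passed` returns `False`, so that human review starts from a change that has already cleared the automated checks.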

3. Companies should also focus on AI use where it delivers a measurable return on investment.

The greatest gains come from code generation, debugging, and documentation. In contrast, tasks such as architecture planning, API integration, and database work still demand human judgment and context.

Why? Concentrating AI on high-yield areas maximizes efficiency without sacrificing control or accuracy. Applying AI in areas where it shows poor results might lead to serious, unnecessary costs.

4. To sustain long-term benefits, teams need continuous upskilling.

Developers must learn how to communicate effectively with AI tools, critically evaluate AI suggestions, and maintain clean, maintainable code. Balancing AI-assisted and manual work helps prevent skill degradation and over-reliance on automation.

Why? This keeps human expertise sharp and ensures AI remains an enhancer, not a replacement.

5. Finally, ethics and risk management require proactive attention.

Firms should monitor how AI systems handle proprietary data, identify potential bias, and ensure intellectual property compliance. Transparent records of AI contributions and regular audits will help maintain trust and accountability as AI takes on a larger role in software development.

Why? Addressing ethical risks early prevents reputational damage and regulatory exposure later.

Looking ahead, we will definitely run another survey next year. We expect to see the shift from “use AI” to “run it as an independent agent”. We believe that agentic workflows that open PRs end-to-end, verification baked into CI as policy, and AI-based operating systems that can independently write, run, verify, debug, and even showcase software are essential.

Considering the pace of AI development and our experience with it, our vision could become a reality within the next year.

Some of the companies that participated in the survey:

Talmatic, Ekotek, Sigli, Spotverge, CleverDev Software, Saritasa, Naveck Technologies, SHIFT ASIA, Timspark, Mallow Technologies, iGuru Software, Fora Soft, Verdantis, Biz4Group, Codebrit Digital, Intertec, Simplico, Truenorth Digital, Wizard Labs, Co-Ventech, Chudovo, Sysmo, Cabot Technology Solutions, Lantern Digital, 2N Consulting Group, Graphiters, launchOptions, QArea, Master of Code Global, Appar Technologies, Scand Poland, Bizople, Usetech, Eagle IT Solutions